I’ve been frustrated with Twitter for a while. One thing is the obvious, pervasive censorship; quite another is the relentless self-promotion of Elon Musk, who claims to stand up for ‘free speech’, which he obviously does not.

And though the first question on that account might logically concern why he acquired Twitter… the real question is -

How does it align with the broader agenda?

Let’s start by going back in time to the days of Twitter 1.0, when people were banned for non-offences, merely because they were inconvenient to the broader narrative. Because what happened back then was that those banned people… went elsewhere. And that led to a boom for the alternatives; primarily Gab1, Gettr2, and Telegram3. All experienced an explosion in user growth and traffic, as people were forcibly driven away from the increasingly censorious mainstream platforms - Twitter and Facebook, especially.

But in January 2022, Elon Musk signalled an interest in acquiring Twitter, before tendering the offer in April. He then signalled that he would pull out in May4 and filed a lawsuit in July, but finally decided to go ahead with the acquisition, accepting the - somewhat pricey - $44bn price tag, supposedly because he was threatened with a $1bn lawsuit should he bail. That did appear a tad odd at the time, because just about everyone knew that bots were a serious issue back then, and certainly far more than 5% of active accounts were no more legit than, say, ‘el0nmusk69’.

And having been on the receiving end of censorship for years, I personally cherished the thought and registered an account - because while he certainly made noises receptive to my libertarian ears, the insufferably hypocritical, left-wing mainstream media papers all took turns pushing their odious5 but nevertheless fully expected6, continuous7 lies8. That alone would have convinced a fair few, I’m sure.

But - without wanting to dwell on this - for whatever reason, Elon decided to hire9 an10 obvious11 hack with WEF12 ties as CEO, and almost immediately thereafter free speech was throttled13… though obviously by ‘accident’ -

‘"This, they said, is part of their ‘speech not reach’ policy," he added, referring to one of the new tenets of Musk's self professed Twitter 2.0, which involves restricting the reach of tweets that violate their policies instead of outright pulling them.’

But we all knew. And gradually, impressions withered away. Yet, in April 2024, the Washington comPost released an article on Elon’s slight legal matter in Brazil - ‘Having remade Twitter, Elon Musk takes his speech fight global’14. And it’s such an odd, odd article, because though the headline suggests a somewhat positive outlook, the text itself immediately goes on to launch an attack -

‘Then there’s Musk, the combative tech billionaire who, since taking over Twitter, has loosened the platform’s restrictions on hateful content and allowed misinformation to flood the platform in the name of free speech.’

Lies, of course, but either way - all claims of ‘hateful speech’ should be backed by legislation. They should absolutely not be matters of interpretation for the courts to decide, because those are ripe for abuse. And that, typically, is the express intent. The EU, for instance, has no legal definition of what ‘hate speech’ entails - yet you will absolutely be prosecuted for breaking this… opaque arbitrariness15.

‘Musk remains a target of Moraes’s investigation, according to a Supreme Court official, …. That probe goes beyond X’s content moderation policies into whether Musk is part of an organized threat to the country’s democracy’

It’s interesting phrasing, because it suggests that the lack of express content moderation leads to ‘our democracy’ being ‘endangered’… in spite of history concluding almost the exact opposite; it really is no coincidence that authoritarian regimes curtail free speech almost immediately. The point here is to create a Ministry of Truth, dictating what is allowable expression… an example of which could be, say, Nina Jankowicz16, the now former ‘executive director of the United States Department of Homeland Security (DHS)'s Disinformation Governance Board’.

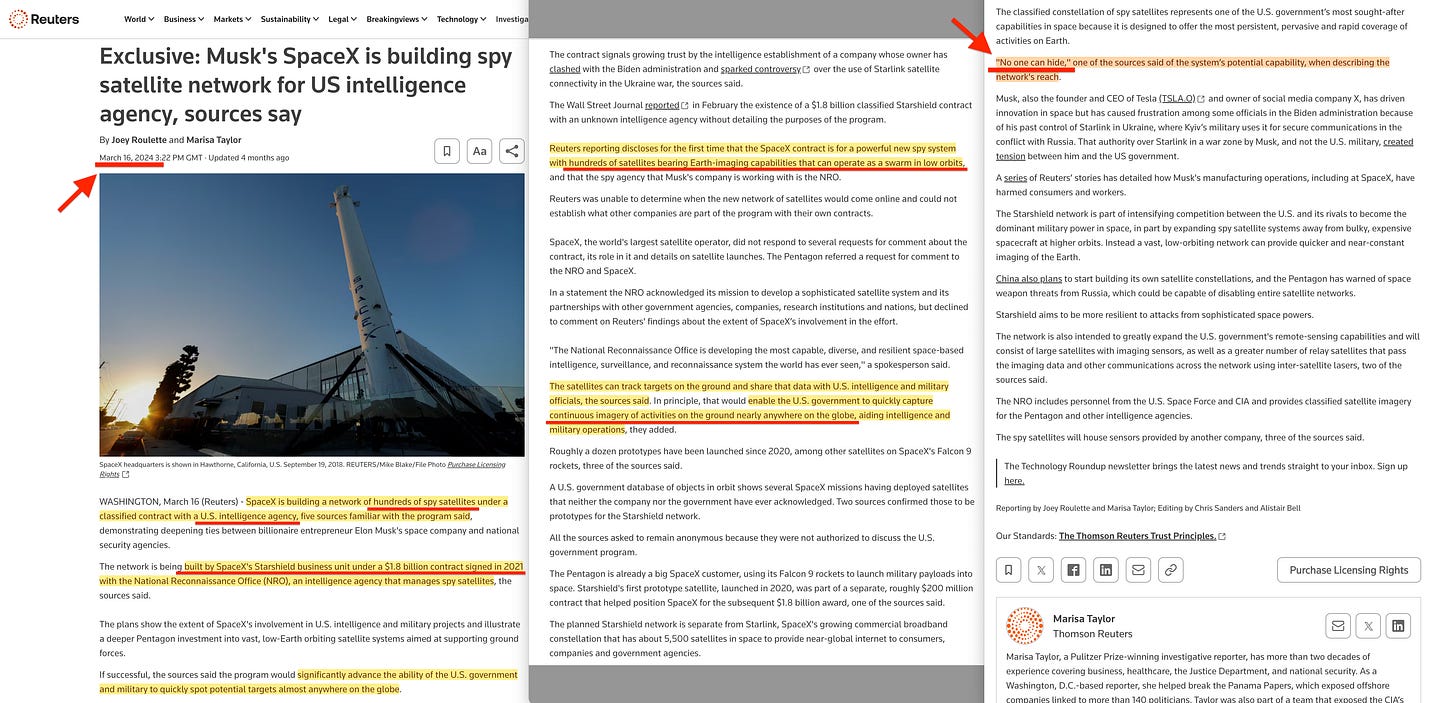

But though I battled for months with the idea that there could be more to the story, my belief that Elon was as pure as the driven snow conclusively burst in March 2024, when Reuters (and others) reported that ‘Musk's SpaceX is building spy satellite network for US intelligence agency’17 -

‘The network is being built by SpaceX's Starshield business unit under a $1.8 billion contract signed in 2021 with the National Reconnaissance Office (NRO), an intelligence agency that manages spy satellites.’

I’ve spent quite a bit of time documenting global surveillance, and through that process of discovery, this didn’t appear outside the realm of possibility -

‘"No one can hide," one of the sources said of the system’s potential capability, when describing the network's reach.’

… because, following my prior discovery of the 2019 Canberra Declaration and a 2018 press release out of Surrey Satellite Systems announcing live streaming from satellites, I had arrived at that very same conclusion. I was just waiting to find the initiative. The technology was absolutely capable - and suddenly, there it was… delivered by the very man promising ‘free speech’.

This led me to start digging through every statistic I could gather, because while I had personally observed a continuous, steady decline in activity, I didn’t quite realise the gravity, nor could I even conceive of a likely hypothesis. My initial take on the situation centred on a subversive daily reduction in impressions…

… but upon revisiting it later, I realised that my impression count was, essentially, peaking at just short of 5m impressions over any 12-week period, never to cross that mark… which by pure coincidence would suggest that the deployed strategy actually was to artificially keep my account below the threshold required for monetisation18 - not that this ever was the intent.

… this would then, by logical deduction, mean Twitter hand-picking the ‘correct’ accounts, those showcasing the ‘right attitudes’, to be rewarded with the right to monetise. And there’s a name for that - that’s a social credit system.

Sure, I’m not suggesting that everyone who derives their income from Twitter is ‘in on it’, nor that those who do monetise will self-regulate in return for a steady income. I would never make such a blanket claim, but I’m sure some do, ever so subtly. And you might argue that even if I’m right, this system is merely a ‘light’ one, which, though factually correct, fundamentally misses the point, because it still very much is one. This would be the thin end of the wedge, and it would only be a matter of time before they very gradually introduced the less palatable rules, one by one19.

But others20 picked up on this implication as well21.

A topic covered with particular interest on this substack is that of ethics - global ethics - which I have repeatedly stated is ripe for abuse. I have also detailed how this initiative gradually burrowed its way through the various international organisations, starting with ICSU’s SCRES initiative in 1996, which regulated scientists internally through ‘ethics disclaimers’, before UNESCO COMEST picked up the torch in 1997, regulating external communications.

It’s not ripe for abuse by accident, but by express intent. And a more mainstream contemporary ‘ethics declaration’ is Hans Küng’s ‘Towards a Global Ethic’, released at the 1993 Parliament of the World’s Religions.

As for Elon Musk…? Well… he’s actually been entirely consistent on the topic of AI regulation: in 2014 he stated that proceeding without it was akin to ‘summoning the demon’22, and in 2015 he (and others, including Stephen Hawking) likened it to an arms race23. And those statements did strike me as a tad odd back then, because public AI at the time essentially amounted to advanced expert systems… and very little else. And the pivotal paper leading to ChatGPT… well, that was released in 201824, making for a rather odd timeline.

And that resonates with another oddity which, upon first encounter, really bothered me, though I didn’t yet quite grasp why: the ‘High-level expert group on artificial intelligence’25. While the group was established in June 2018 (in line with the paper above), their very first, puzzling deliverable was…

‘Ethics Guidelines for Trustworthy AI’

And Elon Musk - he26 banged27 that28 drum29 repeatedly30, did31 he32 ever33. And one noteworthy quote of his from back then34 -

‘Musk said there was need for a regulator to ensure the safe use of AI’

That call reminds me of Dan Shefet, who in 2013 sued Google, forcing them to remove links under an obscure French law passed only a few years prior, ‘The Right to be Forgotten’ - an event which set in motion the creation of an ‘internet ombudsman’ in France35, and ultimately, internet censorship.

Elon’s rhetoric has on occasion reached rather alarmist levels36, but what’s of further interest is that, beyond calling for a related federal department37, his company also launched a ChatGPT competitor, Grok38, which very strangely (considering the man tours the world on the back of alleged free speech, as a champion of the ‘right’) turned out to be the most left-wing39 of an already socialist lot. Surely, that would be the very first thing he’d test… and not whether it loves sarcasm.

But Elon Musk40 is just one voice calling for AI governance. Others41 have42, too.

But this call perhaps seems a tad inconsistent, because the man calling for a regulatory body on the topic of AI… doesn’t always appear to behave in a particularly43 ethical44 manner himself. And as for his claims relating to ‘an illegal, secret deal’45… those seem a tad hard to believe, considering rather a lot of us feel his oppressive censorship, expressed through ‘free speech not reach’, on a daily basis.

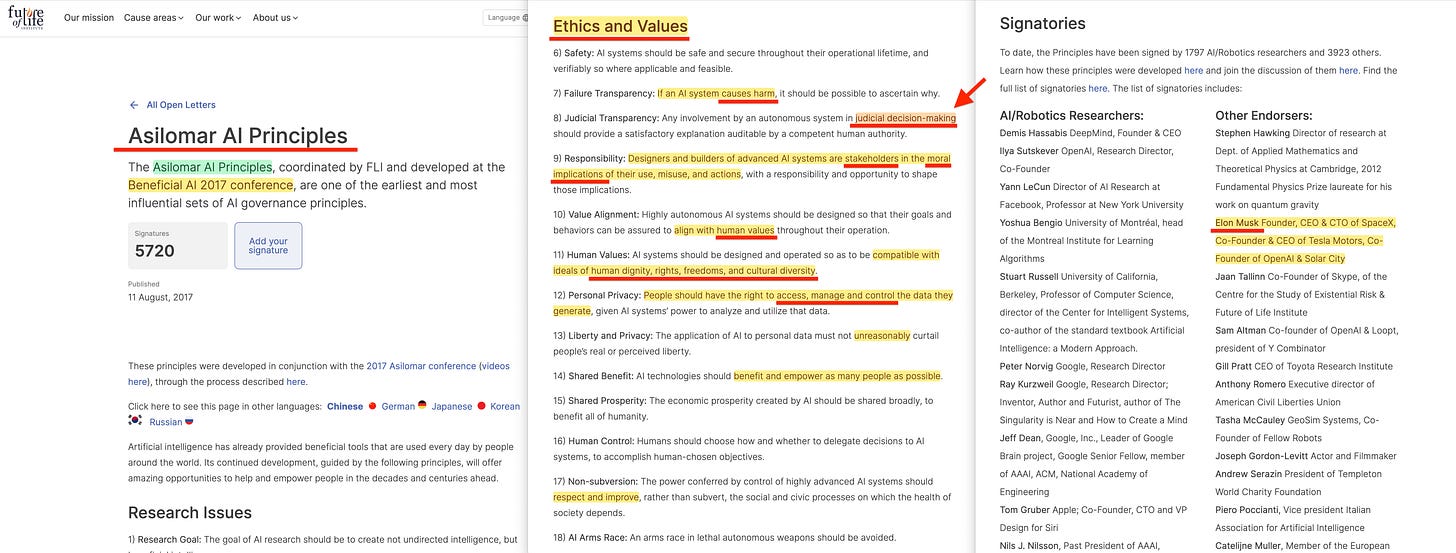

And I hear you say: a regulatory body doesn’t mean an ethics declaration. Well, not so fast. The ‘Beneficial AI 2017’ conference saw the release of the ‘Asilomar AI Principles’46, and these really leave little doubt. Not only do principles 6-18 focus explicitly on ‘Ethics and Values’… but Elon himself endorsed the document.

And should you locate the conference website, you can confirm that not only did Elon participate in a panel at the event… he co-funded the event itself47.

And while Musk is of course a household name due to Tesla and SpaceX, he also operates a company named Neuralink48, which specialises in a type of Brain/Computer Interface - ie, a BCI.

… and guess what - BCIs49 will50 also51 be52 subjected to the same arbitrary ‘ethics’ we see absolutely everywhere - in fact, the topic is so common it even has its own term - neuroethics.

In 2019 ASU News published an interview53 with Andrew Maynard of their Risk Innovation Lab, who went on to state that -

‘Neuroethics extends well beyond the “do good” and “do no harm” we expect of medical science though and encompasses how we might use neurological technologies in other areas. For instance, how do we decide what the appropriate boundaries are for developing brain-computer interfaces that connect us to our smartphone, or enable users to engage in a new level of online gaming, or even to enhance their intelligence?’

… not only can BCIs be used to enhance intelligence, but we don’t actually know where to set boundaries in that regard - and that’s somewhat of an issue, as neuroethics goes beyond traditional medical ethics.

‘… there are many more ways of helping ensure ethical and responsible approaches to innovation are integrated into new technologies, including the technologies that companies like Neuralink are developing. These include listening to and collaborating with experts…’

… thus putting you at the mercy of those ‘experts’ who so awesomely called that alleged pandemic… but perhaps I’m being a bit unfair here -

‘But they also require tech companies to proactively work with organizations such as the World Economic Forum and others that are developing new approaches to responsible, beneficial and profitable innovation’

Oh wait, on second thought…

What this all means is that as BCIs become increasingly powerful (and safe), people will request to have them implanted, and they will thus become a sort of intellectual doping - and those with said implants will outcompete anyone failing to follow suit… in this arms race. You’ll in effect be forced to get one, simply because your competitor has one - or you won’t be able to compete, and thus your kids will starve. And that, by logical extension, means that they will become as ubiquitous as the mobile phone. And that’s a problem, as all of these will need to integrate with ‘neuroethics’ - a topic already firmly in the crosshairs of UNESCO54…

… and UNESCO also happens to have separate initiatives relating to Ethics of Artificial Intelligence55, Lifelong Learning56, and Global Citizenship Education57.

These are all connected, because as Carlos Torres (of UNESCO) informs us in ‘Global Citizenship as a new ethics in the world system’ from 201858, this initiative is framed in terms of social justice, ethics and morality, and a new global consciousness based on human rights and universal values, employing a new lifelong learning perspective.

Consequently, Global Citizenship Education and Lifelong Learning are based on a system of ‘new ethics’: Global Ethics59.

Further, UNESCO released the report ‘International conference on Artificial intelligence and Education, Planning education in the AI Era: Lead the leap: final report’60 in 2019, which details that -

‘A common AI competency framework is needed for both teachers and learners’

Which seems fair, of course - how can they communicate if they don’t possess a similar skillset? But -

‘… reskilling and upskilling of existing workers to equip them with the necessary AI skills can involve an agile and modular approach to enable continuous lifelong learning’

… for whose benefit is that, exactly? The learner, the worker… or the state -

‘AI in education should position ethics at its core, making it essential to establish an ethical framework.’

… oh wait, silly question - it’s to the benefit of those who dictate the ‘ethics’.

‘It is critical to employ a humanistic approach to leverage the potential of AI to achieve SDG 4. There was a consensus among participants that education systems should continue focusing on investing in human intelligence, rather than focusing on those aspects that are likely to be automated with machine intelligence. The humanistic approach views AI as a tool to augment human intelligence and expand the boundaries of human capabilities.’

Some jobs will be automated, and rather than trying to compete against machines, we should employ educational resources to expand the boundaries of human capabilities. And though this document doesn’t explicitly refer to BCIs, with sufficient societal uptake it’s really just a matter of time before they are pushed on kids ‘for the greater good’.

Too far-fetched, I hear you say61?

‘Neuro-technologies, with its rapid development, and its capacities to understand and intervene our brain, are promising avenues to improve the well being of people, considering the high incidence of mental health issues all over the world. However, to deliver for good, they need to be framed ethically, particularly when dealing with the human-computer interface, and the threats to our mental privacy and autonomy derived from this interaction’

No, it really isn’t. This is very much happening at this very moment.

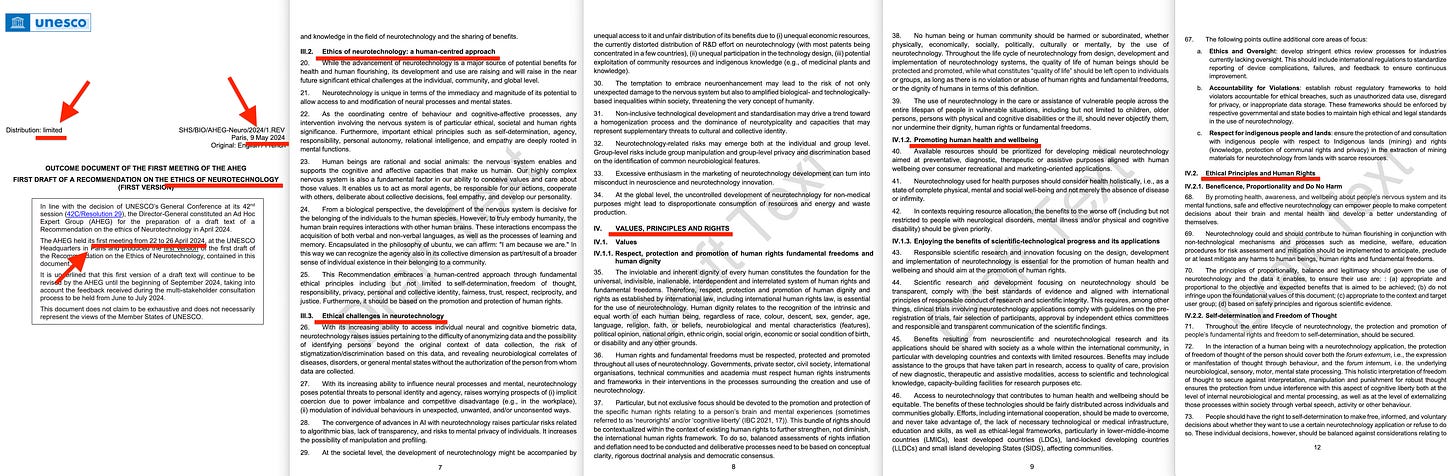

And an ‘Outcome document of the first meeting of the AHEG: first draft of a Recommendation on the Ethics of Neurotechnology’ was the result of an April 22-26, 2024 meeting at UNESCO’s headquarters in Paris. I won’t cover it in detail, but - whether you like it or not - this is real, and no amount of sticking your head in the sand will change that. And what did they discuss?

The ethics and challenges thereof, the values, principles, rights; we have human health and well-being, and we have ethical principles and human rights. And all of this in the context of the ‘Ethics of Neurotechnology’.

Wake up. We are most certainly not in Kansas anymore.

May the 22nd, 2024 - now, that was an impactful day. See, that was the day the ‘Tech Secretary unveils £8.5 million research funding set to break new grounds in AI safety testing’62. Of course, you never found out about it, because on that very same day, Rashid Sanook63 called the 2024 UK General Election64.

The core initiative saw its launch the day prior65… but that coincided with BS protests at American universities66. And further…

… the Seoul Declaration67, with bonus ‘multi-stakeholder’ ‘International Cooperation on AI Safety Science’, and ‘the recently updated OECD AI principles’…

… which by absolute chance were updated mere weeks in advance68.

And before summing up my thoughts, allow me to show you just two more links. We have the ‘IEEE PROJECT 7000 - Model Process for Addressing Ethical Concerns During System Design’69, submitted on the - yes - 21st of May, 2024, but we also have the ‘ISO 59000 family of circular economy standards’70.

The good news is that the former was only just approved. It’s scheduled for completion within two years. What does that entail? Simple. It means that in two years, ‘human well-being’ will become a set of values, ready to be plugged into the fully automated adaptive management digital twin.

Yes, in only two years, a fundamental set of indicators dictating whether your ‘aggregate well-being’ is satisfied will be ready to be plugged into the AI-based General Systems Theory component of Adaptive Management. And as for all those claims of ‘good governance’ and so forth - they will absolutely lie about everything related rather than have a legit conversation about this topic.

Should you commit the mistake of actually reading the document, it soon becomes pretty clear that this isn’t about the ‘policy maker’… or the ‘end user/citizen’. This is about the corporate, first and foremost, given that they quite literally couldn’t be bothered to spend more than 10 seconds describing beneficiaries outside of the corporate, filling in both with buzzwords rather than putting in actual effort. It is clearly the corporate who butters their bread; it’s to them they sell. And unless the corporate can make a quid out of selling your children to a vaccination centre, they couldn’t care less. Incidentally, they can, collecting their $20 bonus total from GAVI post-vaccination (Jeremy Heimans, Purpose Campaigns, 2008; 'Development Finance in the Global Economy: The Road Ahead').

I recently wrote this article on Jeffrey D Sachs, in which the common thread running through his activities can undoubtedly be identified as -

Global Governance through Global Ethics.

And Elon’s summary builds somewhat on that: his efforts help build the global surveillance infrastructure, providing citizen science (social media) and tools for satellite surveillance (information theory), while the acquisition of Twitter itself could be used to access the data to ultimately train AI (general systems theory).

One could speculate that the purpose of this censorship is the creation of a public discourse clearinghouse, where Overton Window-approved topics are greenlit and wrongthink is punished, thus providing an opportunity to test a ‘light’ social credit system which will so obviously be rolled out post carbon currencies.

And this is in some ways a crazy thought, because a society covertly controlled through arbitrary ethics is the express opposite of the claimed intent behind the acquisition of Twitter in the first place.

-

So allow me to summarise and theorise…

The outright banning of voices critical of the narrative, or their silencing through blatantly falsified ‘fact checks’, led to significant growth in alternative social media platforms, generally outside the intelligence community’s sphere of control.

Since seeking to impose the same set of ‘values’ on those platforms would only result in yet another move - potentially one outside the sphere of direct Western control - a more effective counter was necessary.

Musk - in agreement on ‘climate change’ and ‘net zero’ - acquired Twitter, promoted on the basis of alleged ‘free speech’. This solved the issue of growth in alternative platforms.

Subtly, however, the course was changed, and subversive measures were introduced, centred on promoting approved speech and throttling anything arguing the counter.

To stop accounts with big followings from objecting, these were offered financial compensation, yet eventually had to surrender all their personal information to a company outside American jurisdiction.

To stop smaller, yet growing accounts from receiving the same offer of remuneration, they modified the ‘algorithm’ behind the scenes, ensuring these never crossed the critical 5m/12 wk threshold.

This strategy would then self-amplify, leading to a situation where they would in effect progressively control the narrative by reducing people’s feeds to tweets from accounts expressing alignment.

However, this strategy still proved lacking on occasion, and more overt methods were introduced to throttle factually-correct-but-inconvenient speech: blanket bans on tweets gaining traction, the reintroduction of bots to divert traffic, and continuous statistical analysis ensuring incredibly artificial likes-to-retweet ratios, where 1.5:1 appears common. Secondary strategies were applied, such as ensuring virtually no outside visibility when users of the same wrongthink mindset communicated.

This strategy, they hoped, would stop people from leaving the platform, as even the most problematic posters would on occasion see a tweet on the cusp of going viral… provided the tweet itself did not express wrongthink.

An information clearinghouse is an organisation typically inserted close to a central power structure, which seeks to apply a filter on information. And while the argument goes that this is to ‘weed out misinformation’, it’s an organisation more than ripe for abuse. It’s the very first place to corrupt, should you seek to influence a decision-making process, because through the strategic filtering of an information stream you can control, and thus subversively affect, the political process - and this we clearly witnessed during the alleged pandemic.

But even with said politicians being fed a steady stream of lies courtesy of the ‘information clearinghouse’, there’s still the issue of public debate - and that’s where the role of social media becomes critical, as these platforms have in many ways become the global public debate centre of choice. Grievances, sports, celebrities, politics, … even falsified science, pushed through for political expedience - these debates take place on social media sites. But as Ellen Pao’s Reddit began filtering ‘allowable opinion’ for absurd reasons, justified by dubious, one-directionally upheld claims of ‘hate speech’, this practice soon spread to other social media sites, with Twitter 1.0’s early initiative being somewhat of a showcase: it was drafted by 40 civil society organisations (most probably funded by Soros), not a single one of which represented the traditional, conservative point of view. And we now stand at a point in time where the Washington Post is celebrating censorship, clearly not grasping the pivotal importance of free speech in a democratic society.

But once it was discovered that throttling or outright banning accounts through constructed lies relating to ‘missing context’ or obviously fraudulent ‘fact checks’ had a serious flaw, namely that it led people to take their illegal opinions elsewhere, the United Nations and its satellite agencies started to drum up the idea of ‘media literacy’71 education, which fundamentally comes down to instructing people through rhythmic repetition to trust only those very same MSM outlets which lied continuously during the alleged pandemic.

But with the youth brainwashed to ‘critically’ question only… well, anything relating to traditionalism, and against a backdrop of continuously growing Artificial Intelligence capability, the social media strategy could be altered, instead shepherding all dissenting voices to a single site, thus - at least temporarily - solving the issue of alternative platform growth. And all these dissenting voices on this single site could further be - very conveniently - used to train an AI, which ultimately failed to realise its own overcorrected ‘ethical’ bias, thus becoming the most left-wing AI of an embarrassing lot, while at the same time subversively and very progressively acting to silence that very same alternative voice through the effective creation of a user social credit score, financially rewarding those with palatable opinions and punishing dissenting voices through subversive censorship, aka throttling.

What I’m saying is that Twitter is a subversive public discourse clearinghouse, utilising a (soft… for now, anyway) social credit system, which boosts the narrative of expedience. And which narrative would that be? Oh, that’d be the one brought to you through…

Global Ethics.

Twitter 2.0 is not as bad as Twitter 1.0… it is far worse, as it not only deliberately seeks to subversively silence dissenting opinion, but further makes a deliberate attempt to implicitly stop you going elsewhere through Elon’s incessant lies regarding promises of free speech (but only if you live in Brazil, I guess). Oh, and while you’re there, your wrongthink opinions will also be used to censor you, and those harbouring the same ‘ethically incorrect’ opinions, in the future, by training the most left-wing mainstream AI on the planet. And Twitter would then further curtail access, ensuring Grok would be best at… acting against the collective interests of those returning, post acquisition.

But we’re not quite done, because there are Neuralink’s efforts relating to BCI, which will so obviously be gradually imposed upon the people. It will first be promoted as an implicit financial carrot (you’ll outcompete if you do), then an imperative (you’ll lose out if you don’t), and eventually an implied demand (we won’t hire you unless). And - obviously - should you run afoul of some attached arbitrary ‘ethics declaration’, your access can be restricted at any point, ensuring your compliance.

And as this is progressively rolled out through enterprise, prices will decline, and the implant will ultimately become as ubiquitous as the mobile phone - which it will likely eventually eliminate. And by then, the insertion will have become so mainstream, and so safe, that it will be progressively pushed on ever younger generations - which is why UNESCO is all over this topic.

And as for Neuralink - their technology will be subject to the very same legal obligations as enterprise (ie, the World Economic Forum) through ESG, education (UNESCO) through principles of SDG 4.7 (Global Citizenship), morality (ie, the Vatican) through Laudato Si, and global governance (United Nations)…

… and that common obligation, dictating every part of this super-structure is ethics.

Global Ethics for Global Governance.

The star agent pushing this concept… well, Jeffrey D Sachs kind of sticks out.

Elon’s angle… I guess that would have to be modified slightly: Global Ethics for Global AI Governance. And as I’ve already sourced these above, I’ll use ChatGPT to summarise.

And as for AI Safety, all I can say is… May the 22nd.

Whether this is deliberate on Musk’s part or not, I leave up to you to decide. I personally think it’s rather long odds, but either way, it kind of doesn’t matter. Because while we waste time debating individuals, the show goes on72.

The ultimate objective is to wrap the world in ‘ethics declarations’, or ‘codes of conduct’… which are then derived from said arbitrary ‘ethics’. And given that these have arrived at the level of the Supreme Court of the United States, I don’t think we have much time to waste, quite frankly73.

The future looks great… provided you get to dictate what said ‘ethics’ should entail, because these will gradually be rolled out through every aspect of society. And while a system based on a legal framework defines what we CAN do, that is no good if you seek to create a mechanical super-organisation based on ‘theoretical biology’ and applied, purposive science, distilled through normative ethics, rolled out through pragmatic organisation, and ultimately monitored through surveillance, aka empirical observation. This global surveillance will then, through interpretation, lead to applied science and be converted into normative ethics, completing the cycle.

And though the primary driver may often appear to be business - not forgetting that the ‘stakeholder approach’ model in fact originated in corporate planning - that fundamentally fails to factor in that business, too, is ultimately subjugated to… ethics. And this, they don’t control.

The contemporary purpose is Sustainable Development. This is in part carried out through the Fourth Industrial Revolution, and the primary, related facilitating concept in that regard is Adaptive Management.

And Adaptive Management comprises Information Theory and General Systems Theory, ie, global surveillance and Digital Twin modelling. Both of these involve computational strategies, ultimately leading to Computational Sustainability, the idea of which is to ‘deploy algorithms, models, policies and protocols to improve energy efficiency and management of resources, …’74, or ‘Computing and information science can — and should — play a key role in increasing the efficiency and effectiveness in the way we manage and allocate our natural resources…’75, alternatively to develop ‘computational and mathematical models and methods for decision making concerning the management and allocation of resources…’76.

… but - sure - there was no explicit mention of automation of the controlling aspects of Adaptive Management, which broadly consist of cybernetics (external), and resilience (internal, self-organisation). But we are definitely moving in that direction77 - the full computerised automation of society, step by step.

Computational Sustainability further has empirical subsets including epidemiology78, agriculture79, climate science80, urban planning81, energy systems82, water management83, and even ecology84.

We also have pragmatic computational social science85, even computational propaganda86 - which could well include ‘computational models for detecting hate speech on social media’.

Empirical, pragmatic… wait, does that mean - yes. Here’s an example through normative Computational Ethics87 -

‘Moral judgment is complex… Humans often overlook relevant factors or become confused by complex interactions between conflicting factors. They are also sometimes overcome by emotions, such as dislike of particular groups or fear during military conflicts [145]. Some researchers hope that sophisticated machines can avoid these problems and then make better moral judgments and decisions than humans.’

I recall seeing a range of what I believed to be sensationalist claims related to transhumanism and AI running the world. And even now, a part of my brain rejects the idea that advanced AI is essentially being trained as we speak - ironically by those who disagree with it the most - and that society is progressively being shifted away from a foundation of legal frameworks constituting what we are allowed to do, and towards a set of global ethics, stating what we should do. A move which further appears somewhat in line with the field of computing itself, where you typically operate to a strict purpose.

But the clincher really is that even those ethics, set to rule us all, will be generated through computational efforts. And as for this not being as far-fetched as you’d like to believe… well, who better than Elon Musk to explain88 -

‘At the World Government Summit in Dubai, Musk argued that to avoid becoming redundant in the face of artificial intelligence we must merge with machines to enhance our own intellect.’

Because that leads us to Elon Musk’s very own operation focusing on the development of a Brain/Computer Interface (Neuralink), which - like every other entity in the world soon enough - will be controlled through ethics.

On a final note - we have the empirical, pragmatic, and normative expressed as computational. That leaves just the purposive. Well, with that I can’t help you, but I can however point out that all of this leads in one unmistakable direction.

Scientific Socialism.
