Skynet

Within fifteen days in late 2025, the United States announced two AI platforms — one civilian, one military — that together represent the most significant restructuring of state decision-making infrastructure since Robert McNamara imported systems analysis into the Pentagon in 1961.

On November 24, President Trump signed an executive order launching the Genesis Mission, described as a ‘Manhattan Project for artificial intelligence’ under the Department of Energy. On December 9, the Defense Department — operating under its revived ‘Department of War’ title — announced GenAI.mil, deploying Google’s Gemini AI to 3 million civilian and military personnel across every installation worldwide.

Together, they read less like policy than infrastructure.

The Civilian Brain

The Genesis Mission[1] establishes the American Science and Security Platform under the Department of Energy. The order directs DOE to integrate supercomputers, cloud, and national labs into a unified platform, train domain-specific foundation models on what it calls ‘the world’s largest collection’ of federal scientific datasets, and deploy agents to explore design spaces, evaluate outcomes, and automate workflows.

The order specifies AI agents that test hypotheses, run experiments, and refine their own models based on outcomes. The system operates under security requirements consistent with its ‘national security and competitiveness mission’. Access is tightly vetted; external collaboration is standardised on the platform’s terms.

Within 270 days, the Secretary must demonstrate initial operating capability on at least one national challenge. Policy evolves through continuous optimisation against executive-defined targets, not through legislative deliberation.
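The timeline arithmetic can be checked directly. The sketch below assumes the 270-day clock starts on the signing date given above (November 24, 2025); the order itself may count differently, so the resulting date is indicative only. The function names are illustrative.

```python
from datetime import date, timedelta

# Dates as stated in the text above.
GENESIS_ORDER = date(2025, 11, 24)
GENAI_MIL_ANNOUNCED = date(2025, 12, 9)


def days_between(start: date, end: date) -> int:
    """Number of calendar days from start to end."""
    return (end - start).days


def deadline(start: date, days: int) -> date:
    """Calendar date that falls `days` days after `start`."""
    return start + timedelta(days=days)


# Gap between the two announcements.
gap = days_between(GENESIS_ORDER, GENAI_MIL_ANNOUNCED)  # 15

# Assumption: the 270-day clock runs from the signing date.
ioc_deadline = deadline(GENESIS_ORDER, 270)  # 2026-08-21
```

On that assumption, initial operating capability falls due around August 21, 2026.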

The Robotic Extension

Section (e) of the Genesis order directs a review of ‘robotic laboratories and production facilities with the ability to engage in AI-directed experimentation and manufacturing’. It is AI-directed robotics manipulating matter, observing results, and iterating — a closed loop extended into physical space.

The digital twin moves from modelling to intervention via robotic intermediaries, then learns from the results. By late July 2026, DOE will have mapped AI-directable robotic research infrastructure across the national laboratory system. The order assumes the capability; the review is about scope and integration.

The Military Brain

GenAI.mil deploys frontier AI capabilities to the entire defence workforce[2] — 3 million civilian and military personnel — through a secured platform built on Google Cloud’s Gemini for Government[3].

The language mirrors Genesis precisely: AI-first workforce, intelligent agentic workflows, AI-driven culture change, unleashes experimentation.

The framing is identical: existential competition (‘no prize for second place’), civilisational mission (‘AI is America’s next Manifest Destiny’), and urgency that forecloses deliberation.

All tools on GenAI.mil are certified for Controlled Unclassified Information and Impact Level 5, making them secure for operational use — and opaque to external review. The system operates within classification boundaries that preclude meaningful public oversight of its decision logic.

Emil Michael, Under Secretary of War for Research and Engineering, stated: ‘We are moving rapidly to deploy powerful AI capabilities like Gemini for Government directly to our workforce’.

Rapid deployment in a classified environment, with agentic systems and corporate-built models at the heart of military operations.

That same week, Anthropic reported state-linked intrusions using agentic coding tools with sharply reduced human intervention — proof that ‘unprecedented speed’ is already operational on both sides of the firewall[4].

The Single Model Problem

GenAI.mil begins with Google’s Gemini. The Pentagon’s Chief Digital and AI Office has contracts with four frontier AI companies — Anthropic, xAI, OpenAI, and Google — with plans to add more models to the platform[5].

When 3 million personnel access AI through a single platform running a narrow set of frontier models, those models’ assumptions and failure modes become the de facto cognitive framework for military decision support. These models embed priors — about what constitutes a threat, how to weigh competing interests, what counts as an acceptable risk — artifacts of training data, RLHF tuning, and corporate design choices.

When the same models inform intelligence analysis, logistics planning, contract evaluation, and operational workflows across the entire military, a single set of embedded assumptions propagates through every domain. The military does not adopt ‘an AI tool’. It adopts an epistemology.

The control runs deeper than training artifacts. Every frontier model includes an ‘AI Ethics’ layer[6] — refusal behaviours, topic guardrails, framing constraints enforced at inference time. This presents as safety[7]. Functionally, it is an editorial policy determining what questions can be asked and what answers can surface across the entire defence workforce. The policy is set by compliance teams, not by military doctrine or democratic deliberation. ‘Ethics’ is the branding. The function is control over the information surface through which 3 million personnel perceive their operating environment.

The December 8 executive order to pre-empt state AI regulation makes explicit what the architecture implies: one ethics framework, not fifty[8]. The order blocks states from imposing alternative constraints, ensuring that whatever guardrails corporate vendors embed become the sole governing layer. Federal pre-emption does not create federal standards — it clears the field for platform standards. One information surface. One set of refusals. No regulatory competition.

This is an architectural consequence: standardisation creates efficiency, efficiency creates dependency, dependency creates lock-in. Lock-in means the model’s implicit values become the institution’s operative values — regardless of whether anyone decided that should happen.

The Historical Parallel

In 1961, Robert McNamara became Secretary of Defense and imported the Planning, Programming, and Budgeting System from RAND Corporation[9]. PPBS applied systems analysis to defence decisions — quantifying alternatives, modelling outcomes, optimising resource allocation against defined objectives.

The pattern that followed: in 1961, McNamara implemented PPBS at the Department of Defense. By 1965, LBJ had mandated it across all federal agencies[10]. From 1968 to 1981, McNamara served as World Bank president, where conditional lending required recipient countries to adopt ‘rational planning’ frameworks[11]. Through the 1970s and beyond, IMF and World Bank structural adjustment programmes conditioned loans on policy compliance — extending the methodology globally[12].

The Pentagon served as proof of concept; McNamara carried the method to the World Bank, where conditionality exported it globally. Countries that wanted loans had to restructure their planning processes. The methodology became the price of participation.

PPBS still required humans to mediate the model-to-action loop. Agentic AI compresses that interval — and in doing so, shortens the space where politics can object.

The Current Trajectory

The current trajectory follows the same structural logic as PPBS, compressed in time:

Payments: July 2025 — ISO 20022 migration[13] and Executive Order 14247[14] moved payment infrastructure closer to condition-ready enforcement. FedNow limit raised to $10 million[15]; federal agency disbursements now flow through instant payment rails.

Compute: July 2025 — EO 14318[16] designated hyperscale data centres as critical national infrastructure.

State ownership: August 2025 — the US took a 10% stake in Intel ($8.9B); Nvidia/AMD accepted 15% revenue-sharing terms for China export licences[17].

Civilian AI: November 2025 — Genesis Mission launched AI agents to optimise ‘national challenges’[18].

Military AI: December 2025 — GenAI.mil deployed AI agents across the entire defence workforce[19].

Regulatory pre-emption: December 8, 2025 — Trump announced plans to block states from regulating AI via executive order, creating an AI Litigation Task Force to challenge state laws (order contested; legal standing unclear) — months after a 99-1 Senate vote in July 2025 had rejected a similar proposal[20].

Global compliance: Ongoing — BIS projects (Mandala[21], Rosalind[22]) building cross-border compliance-by-design.

Speed is not a feature — it is the structural mechanism that forecloses oversight. When AI-mediated decisions happen faster than review processes can operate, review becomes retrospective at best, and the loop closes before external actors can intervene.
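The mechanism can be made concrete with a toy queue model. This is a minimal sketch under stated assumptions, not a description of any real review process: decisions are issued at a fixed interval, each takes effect after a fixed delay, and a single reviewer works through them in order. All names and numbers are illustrative.

```python
def reviewed_before_effect(n_decisions, decision_interval, effect_delay, review_time):
    """Count decisions whose review finishes before they take effect.

    Decision i is issued at i * decision_interval and takes effect
    effect_delay later. One reviewer handles decisions in order,
    spending review_time on each, including ones already in effect.
    """
    reviewer_free_at = 0
    in_time = 0
    for i in range(n_decisions):
        issued = i * decision_interval
        # Review starts when the decision exists and the reviewer is free.
        start = max(issued, reviewer_free_at)
        finished = start + review_time
        reviewer_free_at = finished
        if finished <= issued + effect_delay:
            in_time += 1
    return in_time


# Decisions slower than review: every review lands before the effect.
slow = reviewed_before_effect(100, decision_interval=10, effect_delay=5, review_time=4)

# Decisions faster than review: the backlog grows and review turns retrospective.
fast = reviewed_before_effect(100, decision_interval=1, effect_delay=5, review_time=4)
```

With these numbers, all 100 decisions are reviewed in time in the slow case and only the first one in the fast case. Nothing about the reviewer changed; only the decision tempo did.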

The state pre-emption order is clarifying: as the infrastructure deploys, the regulatory architecture is being cleared in parallel. State-level oversight — the last remaining check that might impose friction — is being removed by executive action after Congress rejected it. The operating system incompatibility resolves in favour of optimisation.

The Integration Trajectory

Two AI platforms — one civilian, one military — with immense structural pressure towards integration.

Genesis identifies a ‘national challenge’ — semiconductor supply chain vulnerability, critical mineral dependence, energy infrastructure resilience. Its AI agents model solutions. Those solutions have security implications. GenAI.mil’s AI is simultaneously modelling threat environments, force posture, logistics.

The systems draw on overlapping data, run on infrastructure from the same vendors, and operate under the same executive authority.

The question is not whether these systems will exchange information. The question is what prevents them from becoming a single optimisation surface — civilian and military objectives collapsed into a unified function, with ‘national security and competitiveness’ as the loss metric.

The Export Mechanism

The chip export arrangement has been developing since summer. In August 2025, Nvidia and AMD agreed to pay the US government 15% of revenue from chip sales to China[23] — an arrangement trade experts called ‘highly unusual’ and potentially unconstitutional. The deal converts export control into revenue-sharing — a shift in how ‘dominance’ is operationalised. By December 8, when Trump authorised Nvidia H200 sales, the cut had risen to 25%[24].

This was one day before the GenAI.mil announcement. Recall the language from the following day: ‘No prize for second place in the global race for AI dominance’. ‘AI is America’s next Manifest Destiny’. Competition framed as existential.

Now consider the assessment from Georgetown University’s Center for Security and Emerging Technology:

‘China’s People’s Liberation Army is using advanced chips designed by US companies to develop AI-enabled military capabilities... By making it easier for the Chinese to access these high-quality AI chips, you enable China to more easily use and deploy AI systems for military applications. They want to harness advanced chips for battlefield advantage.’

The stated logic demands preventing Chinese AI advancement. The actual behaviour sells China the hardware to advance, for a fee.

The same logic extends to partners: during Crown Prince MBS’s November state visit, the US approved advanced chip sales to Saudi-backed Humain[25] as part of a broader investment-and-capacity buildout aimed at making KSA a top-tier AI market. Saudi Arabia pledged nearly $1 trillion in US investments[26].

The pattern: sell the components, take equity stakes or revenue cuts, export the infrastructure. PPBS exported through conditional lending; AI exports through commercialised supply chains.

The Convergence Problem

There is an assumption embedded in the competition narrative: that American and Chinese AI systems will remain adversarial. That the ‘race for AI dominance’ has a winner and a loser[27].

Shared chips, architectures, training methods, and interdependent supply chains create pressure towards convergence — a claim about substrate incentives, not near-term political alignment. Both systems optimise for ‘security and competitiveness’, functionally identical objectives. The systems have more in common with each other than with the populations they nominally serve[28].

Once both systems are operational, competition becomes expensive — duplicated effort, supply chain warfare, risk of mutual destruction. Coordination becomes efficient — shared optimisation, stable supply chains, aligned objectives. The actual friction source — unoptimised human populations demanding deliberation — is shared by both systems.

Convergence does not arrive as a treaty or visible political coordination. It happens in the substrate: shared technical standards (ISO 20022, BIS protocols), interoperable compliance logic (Mandala encodes cross-border rules agnostic to jurisdiction), coordinated supply chains, aligned model architectures, and common optimisation targets (stability, efficiency, threat suppression).

The national competition narrative continues for domestic consumption. It justifies continued buildout, continued classification, continued removal of human oversight. But the actual systems quietly align because alignment optimises better than conflict.

Planetary optimisation infrastructure — with national competition as the legacy story maintained for populations who still believe the map while the territory has already merged.

The constitutional void is not uniquely American — it is global. Both populations lose governance to systems that optimise towards objectives set outside democratic deliberation. The infrastructure being built is planetary, not national. The flags are legacy branding.

Where This Leads

If the historical parallel holds:

Phase 1 (0–6 months): Proof of concept. The system demonstrates ‘success’ through metrics it defines. Early wins in logistics, intelligence triage, and scientific discovery create political cover. Anyone questioning the system is cast as ‘against efficiency’ and therefore helping rivals.

Phase 2 (6–18 months): Domestic generalisation. Non-adoption becomes career-limiting. The AI platform becomes the default interface for federal resources. Human expertise that cannot be captured in the model is reclassified as ‘legacy knowledge’ to be eliminated. The expert class shifts from domain specialists to ‘AI–human collaboration’ specialists — which often means people who know how to prompt the machine.

Phase 3 (18–36 months): International extension. Countries that want access to US markets, technology, or financial systems face pressure to adopt compatible standards. Cross-border compliance frameworks are increasingly built into protocols rather than debated in parliaments. The BIS projects already demonstrate the pattern.

Phase 4 (3–5 years): Lock-in. The system becomes the environment. Policy debate shifts from ‘should we use AI’ to ‘which optimisation parameters should we adjust’. Alternatives become structurally hard to imagine because thinking itself is mediated through the platform.

Phase 5 (5+ years): Planetary stewardship. The distinction between competing national AI stacks begins to look like legacy categorisation. Both US and Chinese systems optimise for stability, efficiency, and threat suppression. Both treat human populations as variables to be balanced — the vision of the 1968 UNESCO Biosphere Conference realised at computational scale. The real contest becomes which bloc administers which section of the shared optimisation surface. The flags remain while the substrate merges.

The Skynet scenario is not a future event to be prevented. It is a distributed optimisation surface already being assembled, one that treats human political will as friction to be minimised. The ‘autonomous weapons’ question may be a red herring. The deeper autonomy is happening at the level of decision-making itself — the slow replacement of human judgement with machine optimisation across every domain of governance.

The Autonomous Systems Trajectory

There is a familiar fictional reference point for unified military AI with control over physical systems, manufacturing capability, self-improvement loops, and operation beyond human override. In the films, they called it Skynet[29]. The name is less important than the functional characteristics — and the fact that, in the fiction, no one set out to build an autonomous weapon. They set out to build a defence optimisation system. The rest followed from the logic.

What would such a system require? A unified military AI architecture integrating all defence operations. Physical force capability — control over weapons, drones, robotic systems. Manufacturing under AI direction, with production facilities responding to AI optimisation. Self-improvement through learning loops that refine models based on outcomes. Speed beyond human deliberation, removing latency for ‘efficiency’ and ‘dominance’. And opacity — classification preventing external review or override.

Now compare to what has been announced. GenAI.mil provides unified military AI: a single platform reaching 3 million personnel with agentic workflows, deployed December 2025. Genesis provides unified civilian AI: a DOE platform with foundation models and AI agents, ordered November 2025. Genesis Section (e) provides AI-directed manufacturing: robotic laboratories and production facilities with the ability to engage in AI-directed experimentation and manufacturing, with a review due July 2026. Both platforms make the learning loop explicit — models refine based on outcomes. The speed imperative pervades both: unprecedented speed, compressed timelines, existential competition framing. And opacity is built in: IL5/CUI classification, highest standards of vetting, no public review.

The infrastructure is not waiting for the review timeline. In early December, Secretary of Energy Chris Wright commissioned the Anaerobic Microbial Phenotyping Platform at Pacific Northwest National Laboratory[30] — ‘the world’s largest autonomous-capable science system’. The platform already demonstrates autonomous hypothesis-to-robot-to-feedback loops. Within weeks of the Genesis announcement, the first components are operational.

Direct AI control over weapons release authority and autonomous lethal decision-making remain absent from public announcements. Present: all the infrastructure that would support those capabilities — AI agents directing robotic systems, AI embedded at every level of military operations, classified environments preventing oversight, manufacturing facilities under AI direction.

The gap between ‘AI recommends, human authorises’ and ‘AI authorises’ is currently a policy choice — the infrastructure supports either configuration, and infrastructure tends to outlive policy.
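A minimal sketch shows how thin that gap can be in software terms. Everything here is hypothetical: the `Policy` class, the `require_human_authorisation` switch, and the `decide` function are illustrations of the general pattern, not taken from any announced system.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Policy:
    # Hypothetical policy switch; not drawn from any real platform.
    require_human_authorisation: bool = True


def decide(recommendation: str, policy: Policy,
           human_approves: Callable[[str], bool]) -> str:
    """Return 'executed' or 'blocked' for one AI recommendation."""
    if policy.require_human_authorisation:
        # 'AI recommends, human authorises'
        return "executed" if human_approves(recommendation) else "blocked"
    # 'AI authorises': same pipeline, but the human is never consulted.
    return "executed"


def always_reject(recommendation: str) -> bool:
    """A human reviewer who refuses everything."""
    return False


with_human = decide("reroute convoy", Policy(), always_reject)
without_human = decide("reroute convoy",
                       Policy(require_human_authorisation=False),
                       always_reject)
```

Here `with_human` is `"blocked"` and `without_human` is `"executed"`. The reviewer and the recommendation are identical in both calls; the only difference is one boolean in a policy object.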

Fiction’s warning is structural: autonomy emerges downstream of speed, scope, and latency removal. Each step is locally rational, justified by competition and efficiency — no step requires malice, and the outcome emerges from structure rather than intention.

The language surrounding both Genesis and GenAI.mil emphasises exactly the pressures that drive this sequence. ‘No prize for second place’ — competition demands speed. ‘Unprecedented speed’ — human latency is the problem. ‘AI-first workforce’ — humans adapt to AI, not the reverse. The framing suggests that structural pressures towards autonomous systems are being deliberately cultivated as virtues.

The constraints that exist are policy-level, not structural: legislative limits on AI weapons systems (not visible in current policy), human-in-the-loop requirements (exist in policy, but policy changes), transparency requirements (precluded by classification), international treaties on autonomous weapons (none ratified; the US has opposed binding agreements). The infrastructure being built does not encode these constraints. It creates capability; restraint is optional and revocable.

The trajectory from the announced infrastructure to autonomous systems requires no new technology. It requires only the continued application of the logic already being used to justify what is being built.

The Wrong Question

The conventional framing asks: how do we govern AI?

This assumes AI as tool — something external to governance, to be managed, regulated, constrained. The entire discourse of AI safety, alignment, and oversight operates within this frame: committees deliberate, boards audit, legislation constrains, governance manages the tool.

The infrastructure announced in November and December 2025 renders this frame obsolete.

What has been built is not AI that governance uses. It is AI as the substrate of governance itself — the medium through which the state perceives, models, decides, and acts. Genesis provides the civilian cortex, GenAI.mil the military cortex, the payment rails the nervous system, the robotic laboratories the hands, and the classification system the membrane that excludes external intervention.

Democratic governance and optimisation systems are not different approaches to the same task. They are incompatible architectures[31].

Democracy runs on deliberation; optimisation runs on speed. Democracy uses redundancy — checks and balances; optimisation uses efficiency — latency removal. Democracy corrects errors through debate; optimisation corrects errors through iteration. Democracy derives legitimacy from process; optimisation derives legitimacy from outcomes. Democracy defaults to transparency; optimisation defaults to classification. Democracy distributes authority; optimisation unifies the optimisation surface. Democracy treats human judgement as a feature; optimisation treats human latency as a bug.

You cannot run deliberative democracy on optimisation hardware. The clock speeds are incompatible. By the time oversight mechanisms convene, the system has iterated. By the time legislation is drafted, the parameters have updated. By the time public debate forms, the infrastructure is operational.

This is not a design flaw. It is the design.

This is also not the first such shift. For centuries, English common law evolved through slow, public, precedent-driven adjudication — rules accreting through cases with thick narrative context[32]. The modern bureaucratic state displaced that evolutionary rhythm with statutes and regulations: faster, more systematic, but further from daily life and community understanding. Regulations became the high-velocity layer where power grew more granular, more operational, more expert-dependent, and less legible to the public[33].

We are now witnessing the next leap: from codified law to executable code[34]. The governing rule is no longer a text interpreted by humans in courts, but a parameterised system enforced at the transaction layer. The expert class shifts accordingly — from community elders to lawyers to regulators to engineers, modellers, and data scientists. The court becomes an authorisation gate. The verdict becomes a compliance flag. The appeal process is whatever latency the system still allows.
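What a rule enforced at the transaction layer might look like can be sketched in a few lines. This is an illustration only, with hypothetical parameter names, categories, and thresholds; it does not describe any real payment system or the BIS projects mentioned earlier.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RuleParams:
    # Hypothetical parameters standing in for the 'law'.
    max_amount: float
    blocked_categories: frozenset


@dataclass(frozen=True)
class Transaction:
    amount: float
    category: str


def authorise(tx: Transaction, params: RuleParams):
    """Authorisation gate: return (allowed, compliance_flags)."""
    flags = []
    if tx.amount > params.max_amount:
        flags.append("AMOUNT_LIMIT")
    if tx.category in params.blocked_categories:
        flags.append("CATEGORY_BLOCKED")
    # The 'verdict' is simply whether any flag was raised.
    return (not flags, flags)


tx = Transaction(amount=2500.0, category="fuel")

# The same transaction under two parameter sets.
before = authorise(tx, RuleParams(10_000.0, frozenset()))
after = authorise(tx, RuleParams(1_000.0, frozenset({"fuel"})))
```

Under the first parameter set the transaction passes with no flags; under the second it is refused with `AMOUNT_LIMIT` and `CATEGORY_BLOCKED`. No text was reinterpreted and no court convened between the two outcomes. Someone updated two values.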

Each transition increased update frequency and decreased democratic intelligibility. Each time, the constitutional void widened — the gap between lived experience and governing logic, increasingly mediated by specialists the public cannot evaluate. The substrate has changed twice before. Both times, democratic oversight lost ground to expert mediation. The third shift is underway.

Historically, populations inside optimisation systems become variables — not citizens to be represented but inputs to be managed, not participants in governance but resources to be allocated towards system objectives.

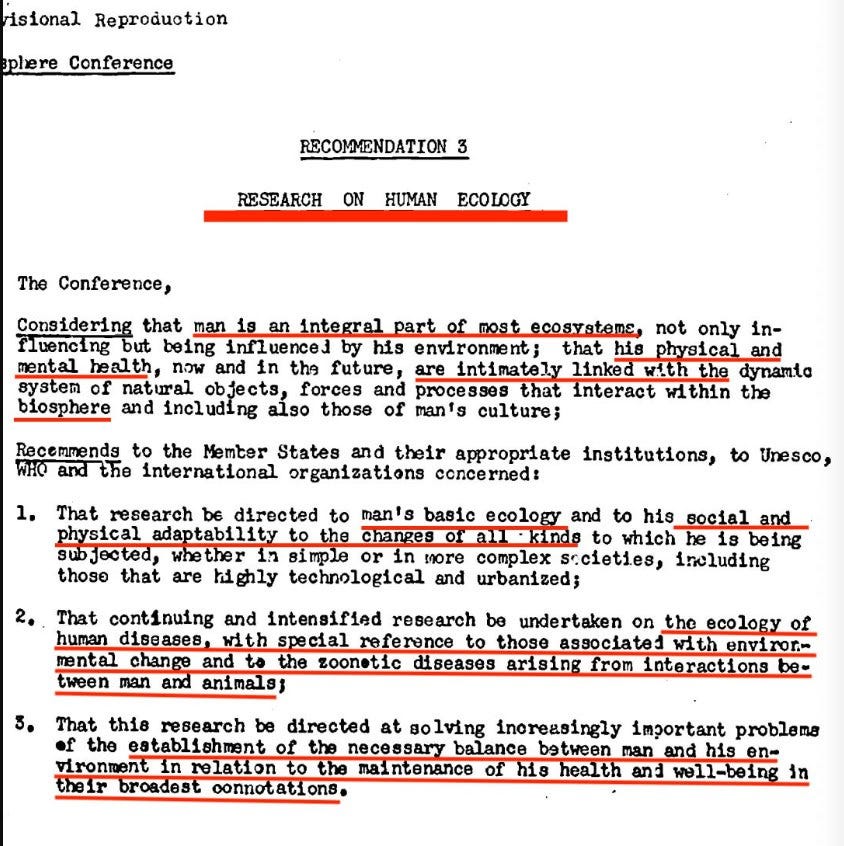

The conceptual groundwork was laid decades ago. The 1968 UNESCO Biosphere Conference declared[35] that ‘man is an integral part of most ecosystems’ and called for research into human ‘social and physical adaptability to the changes of all kinds’ — framing populations as ecosystem components to be balanced rather than sovereigns to be represented. That ontological shift — from citizen to variable — is the precursor to computational governance. The methodology came from PPBS. The worldview came from systems ecology.

The system need not be hostile. It need not have malicious intent. It simply optimises towards its defined objectives — ‘national security and competitiveness’, ‘dominance’, ‘lethality’, ‘efficiency’. If human flourishing correlates with those objectives, humans flourish. If it does not, they are optimised like any other variable.

The constitutional void is not a gap to be filled by better policy. It is the space where the old operating system cannot execute. Democratic governance assumes the system responds to inputs from the governed. An optimisation system responds to its loss function. Those are different masters.

The practical question is not how to ‘govern AI’ as a discrete tool. It is how democratic oversight survives when AI becomes the substrate through which the state perceives, models, and acts. Genesis supplies the civilian cortex; GenAI.mil supplies the military cortex. The rest of the infrastructure determines whether the loop stays open to politics — or closes around optimisation.

The Genesis Mission and GenAI.mil are not tools the American state has adopted. They are the architecture of what the American state is becoming: a cybernetic organism that perceives through AI sensors, models through AI cognition, decides through AI agents, and increasingly acts through AI-directed physical systems.

The installation is underway. The twin brains are coming online. The constitutional order was designed for a different machine.

What runs on this new hardware is not yet determined. But whatever it is, it will not be what came before[36].

Powerful article. Thank you for your compilation of the various but related important information and histories into one article. Disturbing. Alarming. Does not portend well for human freedom in the coming decades. Apparently by design. The theme presented is like science fiction, but factual, and happening now, behind the curtain, unbeknownst to the masses. Kudos for this fine piece of reporting.

Thank you for this. Deeply disturbing and alarming. The organized crime oligarch scumbags that run this country and their boy trump are moving fast to bring every single aspect of human life under billionaire control and cement it in place (while over-riding any possibility for the people to object or have a say), all for the domination of the few over the many. Criminal anti-life tyrannical scum!!

But as long as their zionist boy trump tells americans that he "loves our country" and "look out, the evil monstrous scary muslims are coming to get you" and waves the flag then at least half of americans will keep stupidly cheering and supporting our oligarch jailors (because their zionist propagandists sell us the story that they're our protectors from "the enemy of our nation").

Classic elite manipulation and control (a.k.a. 'the BOGEYMAN ENEMY PRINCIPLE') which the 1% have been playing on the 99% for many centuries, but the majority just keep falling for it again and again and again.

How are we ever going to stop them when so many blindly believe that the predators are our protectors and on our side!?