A Constitutional Void

In 2025, three US policy shifts quietly transformed government into an adaptive governance engine. When considered together, they assemble a compliant American node within an emerging, automatic planetary adaptive management system.

This is not a warning about what’s coming. It’s documentation of what already happened while no one was watching.

The Treasury’s December 2024 AI report¹ marks the mainstreaming of this approach: it documents growing use of AI and data-driven compliance across financial services and the risks that follow when complex systems automate enforcement at scale. BIS pilots, meanwhile, push compliance toward executable rules — policy-as-code — at the API boundary.

None of this requires a new statute to change the text of policy; it changes how policy is applied and updated in practice.

Executive Summary

In 2025, the US upgraded three systems: AI infrastructure, payment rails, and government-wide AI adoption. Separately, each looks like modernisation. Together, they create infrastructure where policy rules can be encoded directly into economic transactions and updated continuously through machine learning.

The Bank for International Settlements has built the global coordination layer. Projects like Mandala (cross-border compliance), Rosalind (programmable money), and Ellipse (adaptive learning) aren’t theoretical — they’re operational prototypes with published specifications.

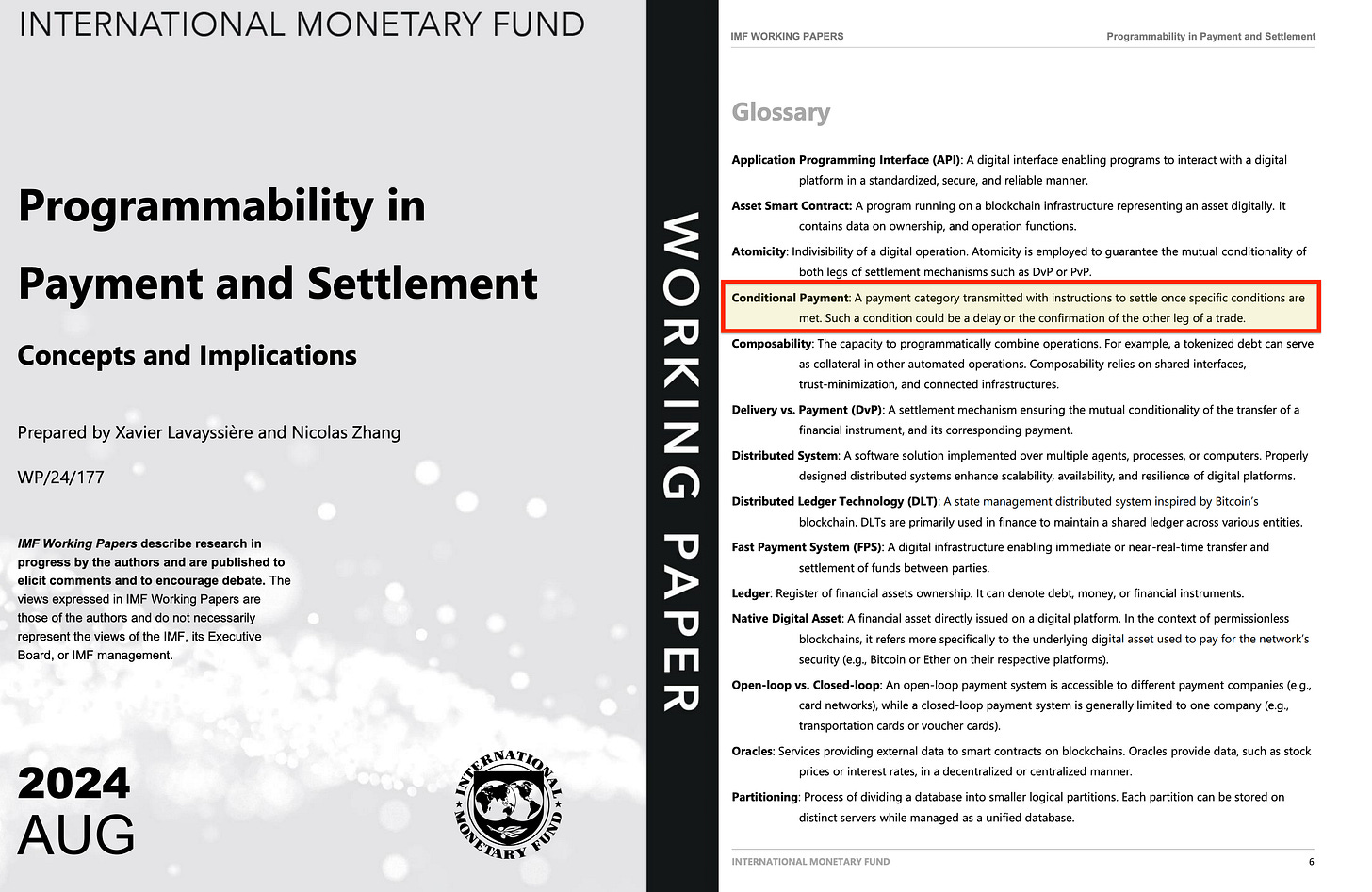

The system makes every transaction conditional. Conditions can be based on carbon emissions, ESG compliance, social justice metrics, or intergenerational equity calculations. The framework itself is neutral — it enforces whatever parameters are programmed. But enforcement is absolute: non-compliant transactions simply fail to clear, with no credible possibility of appeal.

This applies to individuals, enterprises, and governments. If you don’t meet the conditions, your ability to buy things simply comes to an end. The phrase ‘inclusive capitalism’ reveals the mechanism: inclusion demands participation. Participation means compliance with machine-readable rules that update faster than democratic institutions can debate.

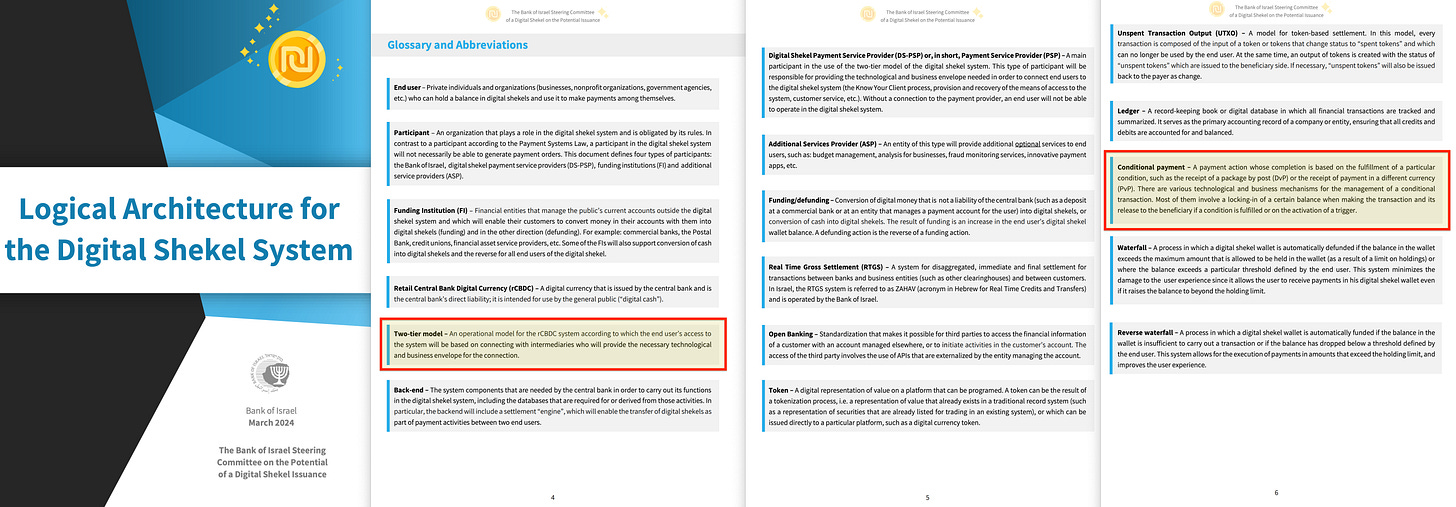

The locus of enforcement sits with central banks. Project Rosalind’s API architecture shows how they would control the programmable money infrastructure at the retail level, setting the technical standards that determine who can participate. This makes central banks — not elected governments — the ultimate arbiters of economic access, for every transaction regardless of size, counterparty, location or purpose.

The constitutional problem is simple: when policy evolves at algorithmic speed inside payment systems, democratic oversight becomes structurally impossible. Policy-making has moved from legislatures to implementation layers — message schemas, APIs, compliance parameters controlled by unelected technocrats.

This article documents how the infrastructure was implemented in the United States, why oversight has failed, and why the stealth deployment proves the system cannot be trusted to operate with accountability.

Building the US Node

1) AI as Adaptive Infrastructure (Beyond Static Analysis)

Documented shift: Executive Order 14141² (January 14, 2025) positioned frontier compute as national AI infrastructure. It was later revoked and superseded by Executive Order 14318³ (July 23, 2025), which fast-tracks permitting for large data-center projects — effectively hardening the compute substrate such systems run on.

Acceleration: EO 14318 reframed hyperscale data centers as critical national infrastructure and streamlined federal permitting. The text doesn’t say ‘adaptive policy’, but the practical effect is to backstop the capacity that adaptive governance would require.

Strategic integration: ‘America’s AI Action Plan’⁴ (July 23, 2025) organises federal action around three pillars — accelerating innovation, building AI infrastructure, and international leadership — and operationalises them via related executive actions⁵.

Why it matters: This reframing converts frontier compute from a mostly commercial asset to shared governance infrastructure. Treasury’s 2024 report underscores the obvious risk: as systems automate enforcement, optimisation can drift from original policy intent unless governance keeps pace.

2) The Adaptive Execution Layer

Documented shift: Executive Order 14247⁶ (March 28, 2025) mandates electronic payments — and explicitly states it ‘does not… establish a CBDC’. Treasury followed with implementation resources for agencies⁷.

Standards cutover: Fedwire’s ISO 20022 migration⁸ in mid-July 2025 put rich, structured data in the payment message itself — the precondition for rule application ‘at transaction time’.

Why it matters: Digitised payments aren’t just programmable; they are condition-ready. The Bank of Canada’s 2025 feasibility study⁹ finds that certain architectures ‘would support arbitrarily complex, full-featured programmable arrangements… well-suited for contingent payments’. Combine message richness (ISO 20022) with programmable arrangements and you have rails where enforcement logic can be encoded, executed, and updated rapidly.
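
To make ‘condition-ready’ concrete, here is a minimal Python sketch of rule application at transaction time. It assumes a simplified, hypothetical message structure (the field names are illustrative and are not the actual ISO 20022 element names) and invented rules. What it shows is the general pattern: once a message carries structured fields, a rule engine can test them, and the policy lives in a swappable rule table rather than in the engine itself.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class PaymentMessage:
    # Hypothetical, simplified stand-in for structured payment data.
    # Field names are illustrative only, not the real ISO 20022 elements.
    amount: float
    currency: str
    purpose_code: str
    creditor_country: str


# A rule pairs a name with a predicate that must hold for the payment to clear.
Rule = Tuple[str, Callable[[PaymentMessage], bool]]


def evaluate(msg: PaymentMessage, rules: List[Rule]) -> Tuple[bool, List[str]]:
    """Apply every rule at transaction time; clear only if none fail."""
    failures = [name for name, check in rules if not check(msg)]
    return (len(failures) == 0, failures)


# Hypothetical policy parameters. Swapping tables changes outcomes
# without touching the evaluation engine above.
RULES_V1: List[Rule] = [
    ("amount_cap", lambda m: m.amount <= 10_000),
    ("currency_allowed", lambda m: m.currency in {"USD"}),
]

RULES_V2: List[Rule] = RULES_V1 + [
    ("purpose_blocked", lambda m: m.purpose_code != "PURPOSE_X"),
]

if __name__ == "__main__":
    msg = PaymentMessage(amount=250.0, currency="USD",
                         purpose_code="PURPOSE_X", creditor_country="US")
    print(evaluate(msg, RULES_V1))  # (True, [])
    print(evaluate(msg, RULES_V2))  # (False, ['purpose_blocked'])
```

The design choice worth noticing is the split between a fixed engine and a versioned rule table: moving from `RULES_V1` to `RULES_V2` is a data change, not a code change.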

3) Wiring the Adaptive Loop

Governance: OMB Memorandum M-25-21¹⁰ (April 3, 2025) resets AI governance across agencies — requiring policy, inventories, and controls — and is now reflected in agency compliance plans.

Procurement & experimentation: GSA’s USAi platform¹¹ (August 14, 2025) gives agencies a secured environment to evaluate and adopt AI capabilities — standardising how models are piloted and governed.

Research substrate: NSF’s $100 million investment¹² (July 29, 2025) in National AI Research Institutes strengthens the academic bedrock for operational AI, including areas relevant to policy modelling.

Why it matters: These moves harden the standards and practice layer for machine-assisted implementation — so AI doesn’t just analyse; it sits in the loop where rules are executed and managed.

4) DOGE: Adaptive Expenditure Targeting in Practice

Documented implementation: The Department of Government Efficiency (DOGE) was stood up in early 2025 with Elon Musk in a central role¹³. Its existence and ambitions are well-reported; details of claimed savings and internal mechanics remain contested¹⁴.

Why it matters: Whatever one thinks of DOGE’s outcomes, it serves as an at-scale testbed for AI-driven expenditure targeting inside the executive branch. Public reporting confirms its creation and aggressive mandate; litigation and investigative coverage underscore the legal and transparency tensions that arise when optimisation happens in the implementation layer.

The Planetary Adaptive Management System

BIS projects don’t proclaim ‘machine-generated policy’, but they do operationalise policy-as-code and compliance-by-design:

Rosalind (BIS/BoE)¹⁵: An API prototype layer for retail CBDC ecosystems, showing how central bank ledgers could interface with private-sector services.

Mandala (BIS/MAS/RBA/BOK/BNM)¹⁶: Encodes jurisdiction-specific policy and regulatory requirements into a common protocol for cross-border use cases — compliance-by-design at the message/API level.
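
Compliance-by-design across jurisdictions can be pictured as looking up each side’s machine-readable parameters and combining them before the payment proceeds. The sketch below is a hypothetical illustration only: it does not reproduce Mandala’s actual protocol or data model, and the jurisdiction codes, limits, and purpose-code requirement are invented placeholders.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class JurisdictionPolicy:
    # Hypothetical parameters; real regulatory regimes are far richer.
    outbound_limit: float   # maximum single payment leaving the jurisdiction
    inbound_limit: float    # maximum single payment entering the jurisdiction
    requires_purpose: bool  # whether a purpose code must accompany the payment


# Shared, machine-readable policy table (placeholder jurisdictions and values).
POLICY_TABLE: Dict[str, JurisdictionPolicy] = {
    "JUR_A": JurisdictionPolicy(outbound_limit=5_000, inbound_limit=50_000, requires_purpose=True),
    "JUR_B": JurisdictionPolicy(outbound_limit=20_000, inbound_limit=10_000, requires_purpose=False),
}


def cross_border_check(sender: str, receiver: str, amount: float,
                       purpose: Optional[str]) -> List[str]:
    """Return every rule violation; an empty list means the payment may proceed."""
    outbound = POLICY_TABLE[sender]
    inbound = POLICY_TABLE[receiver]
    violations: List[str] = []
    if amount > outbound.outbound_limit:
        violations.append(f"{sender}: outbound limit exceeded")
    if amount > inbound.inbound_limit:
        violations.append(f"{receiver}: inbound limit exceeded")
    # Either side's requirement binds the whole payment.
    if (outbound.requires_purpose or inbound.requires_purpose) and not purpose:
        violations.append("purpose code required")
    return violations


if __name__ == "__main__":
    print(cross_border_check("JUR_A", "JUR_B", 3_000, "TRADE"))  # []
    print(cross_border_check("JUR_A", "JUR_B", 12_000, None))
```

In this sketch the receiving jurisdiction’s rules bind the sender: a payment from `JUR_B` to `JUR_A` still needs a purpose code because `JUR_A` requires one.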

Ellipse (BIS suptech)¹⁷: A supervisory analytics prototype that integrates data and applies ML/NLP¹⁸ to spot risks — showing how the learning loop can sit in supervisory tooling even if the payment API itself remains deterministic.

IMF validation: IMF Working Paper WP/24/177¹⁹ systematises ‘programmability in payment and settlement’, clarifying how conditionality can be embedded in payments.

Bank of Israel²⁰: Ongoing Digital Shekel design work details a two-tier model (‘positive money’) and technical considerations for advanced use cases; it doesn’t claim real-time ‘self-learning’ rules in the core payment API, but the architecture supports payment conditionality and iteration across releases.

Why it matters: The global picture is clear: encode rules at the API boundary; analyse outcomes in supervisory technology; iterate fast. That creates a de facto planetary learning environment without needing a treaty that says ‘global adaptive governance’.
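
The ‘encode, analyse, iterate’ loop can be sketched in a few lines. This is a hypothetical illustration, not Ellipse’s design: a fixed threshold rule stands in for the deterministic API check, and a simple percentile calculation stands in for the ML/NLP analytics that supervisory tooling would actually use. The rule and the target hold rate are invented parameters.

```python
import statistics
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class RuleVersion:
    version: int
    threshold: float  # hypothetical rule: payments above this amount are held


def api_boundary(amounts: List[float], rule: RuleVersion) -> List[bool]:
    """Deterministic step: a given rule version always yields the same decisions."""
    return [amount <= rule.threshold for amount in amounts]


def supervisory_review(amounts: List[float], rule: RuleVersion, hold_pct: int) -> RuleVersion:
    """Analytical step: propose a threshold that would hold roughly `hold_pct`
    percent of the observed payments, released as the next rule version."""
    cut_points = statistics.quantiles(amounts, n=100)  # 99 percentile cut points
    new_threshold = cut_points[100 - hold_pct - 1]
    return RuleVersion(version=rule.version + 1, threshold=new_threshold)


if __name__ == "__main__":
    observed = [float(x) for x in range(1, 101)]
    v1 = RuleVersion(version=1, threshold=90.0)
    print(sum(api_boundary(observed, v1)))  # 90 payments clear under v1
    v2 = supervisory_review(observed, v1, hold_pct=5)
    print(v2)
    print(sum(api_boundary(observed, v2)))  # 95 payments clear under v2
```

Keeping the two steps separate mirrors the distinction drawn in the Ellipse entry: the payment-side check stays deterministic, while the learning happens between releases.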

Why Oversight Fails

Adaptive management generates a constitutional void through three mechanisms:

Implementation black hole: The most consequential changes can occur in implementation — message schemas, APIs, model updates — where formal public deliberation is weakest. GAO’s 2025 work²¹ shows AI use cases expanding rapidly while governance requirements proliferate and agencies race to keep up²².

Expertise barrier: When enforcement is encoded across payments messaging, APIs, and model-assisted workflows, review becomes a continuous technical exercise. BIS supervisory technology work²³ and agency compliance plans show supervision moving into data-and-ML territory that traditional review processes strain to parse at speed²⁴.

Success trap: Demonstrated efficiency (or headline savings) crowds out accountability debate. With DOGE, mainstream reporting highlights both sweeping claims and serious disputes — exactly the dynamic where ‘it works’ becomes the argument that ends the conversation.

The Planetary Learning Network

Data pooling (soft): Suptech prototypes like Ellipse show how supervisors can learn from pooled signals; cross-border compliance designs like Mandala require shared parameterisation.

Rule harmonisation (de facto): Standardised learning and rule-encoding parameters — message fields, API contracts, reference tables — become de facto policy. BIS/CPMI work on tokenisation²⁵ and programmable settlement points in the same direction: executable conditions attached to payments and assets, subject to local law.²³

The real danger: Even without formal declarations about ‘learning policy’, the stack enables it: AI-backed supervision + programmable/conditional rails + fast-moving procurement and implementation guidance. That lets policy evolve in code and models between legislative cycles.

The Adaptive Governance Revolution

By late 2025, the engine was operational:

AI infrastructure treated as national infrastructure (EO 14318; EO 14141 revoked)

Payments digitised into a condition-ready execution layer (EO 14247 + ISO 20022)

Government-wide AI governance and a secured experimentation platform (M-25-21 + USAi)

BIS pilots and IMF analysis matured the global interface (Rosalind, Mandala, Ellipse; WP/24/177)

Together, these make adaptive, conditional finance technically feasible without omnibus legislation — because the policy-making surface moved from statutes to implementation.

In other words: this isn’t about payments or efficiency. It’s about who makes policy in the 21st century — and increasingly, it’s systems that implement, measure, and iterate faster than our institutions can debate.

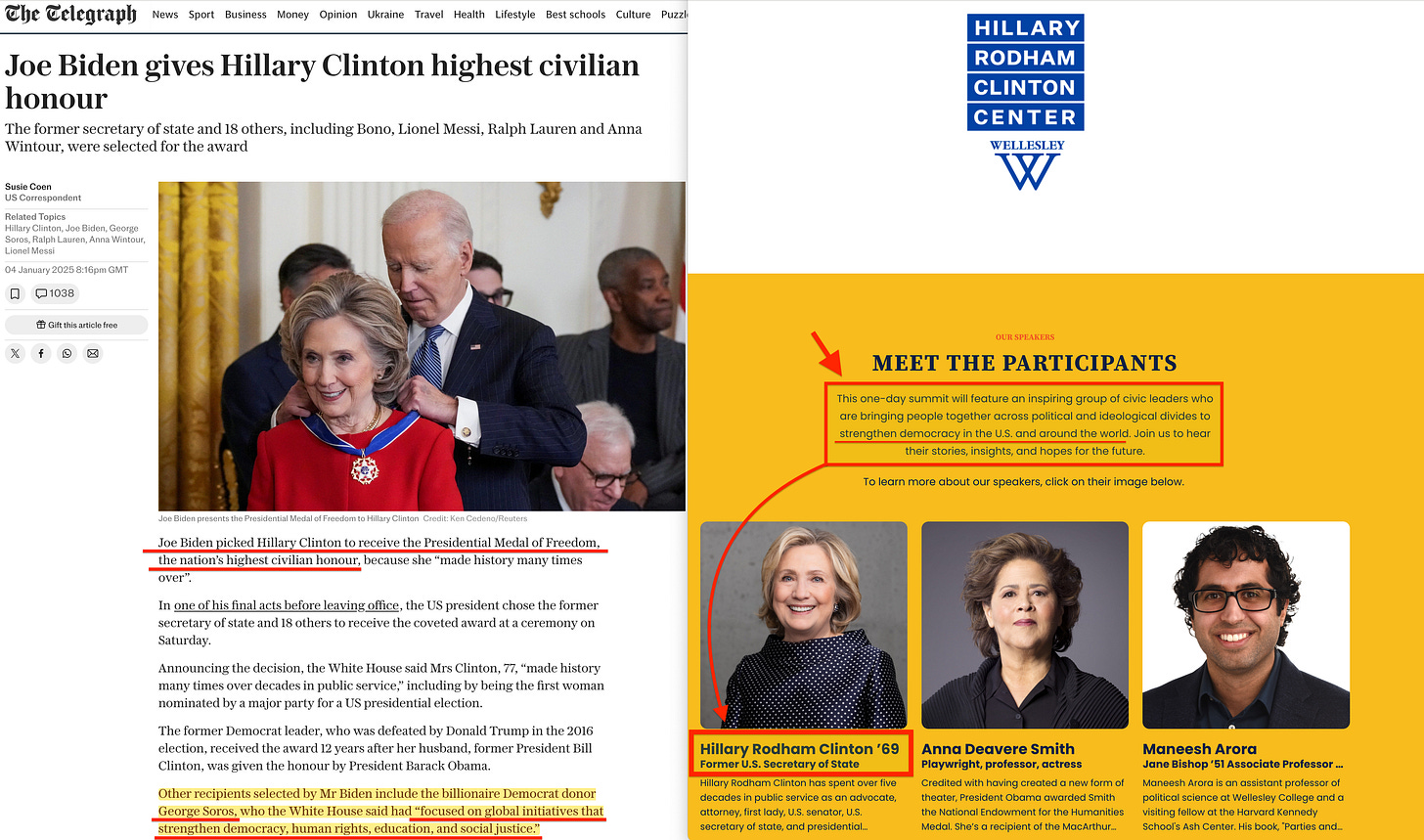

America is no exception. In fact, by providing the technology stack and complying fully with BIS initiatives, especially on harmonisation, it leads on several fronts, at the cost of the very democracy some of its politicians currently seek to ‘strengthen’²⁶ ²⁷.

Conclusion

The danger is neither theoretical nor a ‘conspiracy theory’; it is a structural inevitability. The US domestic stack — AI infrastructure treated as national priority, payment rails upgraded to ISO 20022’s structured messaging, government-wide AI governance frameworks — creates the technical substrate that BIS projects require to function globally:

When Mandala needs to encode and execute cross-border compliance rules in real time, it relies on exactly the kind of programmable, data-rich payment infrastructure that Executive Order 14247 and the Fedwire migration delivered.

When Rosalind’s API layer needs frontier compute to process conditional logic at transaction speed, Executive Order 14318’s data center prioritisation provides it. The American contribution is not symbolic participation; it is the technological bedrock that makes planetary adaptive management technically feasible.

Without the US payment infrastructure accepting structured data and without American compute capacity supporting the algorithmic governance layer, the BIS architecture remains a prototype. With them, it becomes an operating system.

This is why CBDCs and digital identity are not merely features of this system — they are load-bearing pillars. A central bank digital currency provides the programmable unit of account (opening the pathway to conditional economics) through which policy can be embedded directly into money itself, bypassing the need for after-the-fact enforcement. Digital identity creates the authenticated, parameterised nodes in the network — ensuring that every economic actor has verifiable credentials that can be checked against rule sets in real time.
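
How a credential check and a rule check might combine at the point of payment can be sketched as follows. Everything here is a hypothetical toy: the attribute names, tiers, and limits are invented, and a shared-secret HMAC stands in for the public-key signatures that real verifiable-credential schemes use.

```python
import hashlib
import hmac
import json
from typing import Any, Dict

# Hypothetical issuer secret. Real credential schemes use public-key
# signatures so verifiers never hold the issuer's signing key.
ISSUER_KEY = b"demo-issuer-key"

# Hypothetical rule set: spending limit per credential tier.
RULES: Dict[str, Dict[str, float]] = {
    "limits_by_tier": {"basic": 1_000.0, "full": 50_000.0},
}


def _sign(attributes: Dict[str, Any]) -> str:
    # Canonical JSON so the same attributes always produce the same tag.
    payload = json.dumps(attributes, sort_keys=True).encode()
    return hmac.new(ISSUER_KEY, payload, hashlib.sha256).hexdigest()


def issue_credential(attributes: Dict[str, Any]) -> Dict[str, Any]:
    """Bind identity attributes to an authentication tag."""
    return {"attributes": attributes, "tag": _sign(attributes)}


def authorise(credential: Dict[str, Any], amount: float,
              rules: Dict[str, Dict[str, float]]) -> bool:
    """Clear the payment only if the credential verifies and its tier permits the amount."""
    if not hmac.compare_digest(_sign(credential["attributes"]), credential["tag"]):
        return False  # tampered or unauthenticated credential
    tier = credential["attributes"].get("tier")
    limit = rules["limits_by_tier"].get(tier, 0.0)  # unknown tier: limit of zero
    return amount <= limit


if __name__ == "__main__":
    cred = issue_credential({"id": "user-1", "tier": "basic"})
    print(authorise(cred, 500, RULES))    # True
    print(authorise(cred, 5_000, RULES))  # False: over the basic-tier limit
    forged = {"attributes": {"id": "user-1", "tier": "full"}, "tag": cred["tag"]}
    print(authorise(forged, 5_000, RULES))  # False: tag does not match attributes
```

The ordering matters in this sketch: authentication happens first, so a forged upgrade to a higher tier fails before any limit is consulted.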

Together, they transform money from a neutral medium of exchange into a conditional access token, and economic identity from a broad social status into a machine-readable permission structure. The ‘great reset’ language²⁸ — whatever one thinks of its rhetorical deployment — accurately captures the scale of transformation: this is not reform of the existing system but replacement of its fundamental operating logic. Markets coordinated by price signals and human judgment give way to markets steered by algorithmic rule enforcement and continuous optimisation against policy targets.

The result is a world model where governance operates through the unit of account itself. Policy is no longer debated, legislated, and then enforced; it is compiled into protocol, executed at the API boundary, and adapted through feedback loops that operate faster than democratic deliberation. When your ability to transact depends on algorithmic clearance against rules that update continuously, political economy becomes cybernetic management.

This, in itself, does not require malicious intent or shadowy coordination — it emerges naturally from the technical capabilities the infrastructure creates and the institutional incentives to use them. The BIS provides the global standards and coordination layer; participating nations like the United States provide the compute, the data infrastructure, and the implementation. Together, they construct what ‘From Rosalind to Mandala’ identified: compliant national nodes within an emerging planetary adaptive management system, where the most consequential governance decisions happen not in legislatures but in the implementation details of payment protocols, API specifications, and algorithmic compliance engines.

However, though this transition theoretically could be organic, the alignment with ‘Inclusive Capitalism’ makes coincidence implausible.

When the Council for Inclusive Capitalism²⁹ explicitly advocates for financial systems that enforce social and environmental outcomes, and the BIS simultaneously builds the technical infrastructure to make transactions conditional on ESG compliance, we are observing not parallel evolution but coordinated implementation.

Project Gaia³⁰ automates corporate climate disclosure verification; Project Symbiosis³¹ tracks supply-chain emissions; Mandala³² embeds policy compliance — presumably including ESG criteria — directly into cross-border payment protocols. The ideological framework names the goal: align capitalism with stakeholder values rather than shareholder returns.

The technical architecture provides the enforcement mechanism: programmable money that only clears when algorithmic checks confirm compliance with those values. This is not reform or evolution — it is replacement of market coordination through price signals with algorithmic steering toward predetermined policy targets. Whether achieved through explicit conspiracy or through aligned institutional incentives among central banks, global governance bodies, and major financial institutions that share both ideology and technical capacity, the outcome is identical: economic participation becomes conditional upon compliance with machine-readable rules that encode ‘inclusive’ priorities directly into the unit of account itself.

The infrastructure does not enable this transformation — it is this transformation, dressed in the language of modernisation and efficiency.

The Silence Is the Evidence

Some will argue that adaptive planetary management is not inherently problematic — that it depends on how it’s implemented, on the safeguards built in, on democratic oversight. This argument fails immediately.

If this system were intended to operate with democratic legitimacy, its architects would have announced it, debated it, and sought public mandate for a transformation this fundamental. They did not.

The fact that this analysis represents the only place on the internet where these connections are made explicit is not a failure of public attention — it is evidence of deliberate opacity.

When you build a system in silence, you reveal your assessment of whether the public would consent if asked. The stealth deployment is the confession. A system constructed without democratic input will not suddenly operate with democratic accountability once complete.

The constitutional void was never an accident of oversight — it was the precondition for implementation.
