Velocity

Two weeks ago, Skynet and The Predictive State mapped a pattern: infrastructure being built in the background while policy was debated in public. Since then, announcements have become procurement, executive orders have become contracts, and ‘future capability’ has become operational. The gap between what is argued about and what is quietly built is where governance actually happens.

The pattern is no longer emerging. It’s here.

Find me on Telegram: https://t.me/escapekey

Find me on Ghost: https://the-price-of-freedom-is-eternal-vigilance.ghost.io

Bitcoin 33ZTTSBND1Pv3YCFUk2NpkCEQmNFopxj5C

Ethereum 0x1fe599E8b580bab6DDD9Fa502CcE3330d033c63c

Executive Summary

The title, Velocity, points to the decisive factor: pace. While infrastructure deploys at the speed of procurement (daily contract awards, weekly MOUs, monthly platform rollouts), oversight operates at the speed of legislation and litigation (multi-year cycles, procedural delays, appellate review). The gap between the two is where governance currently resides. Automated anticipatory governance is what emerges when sensing becomes comprehensive and sensitivity becomes programmable.

Existing popular analytical frameworks capture parts of this picture, but they all miss the central mechanism. While mainstream governance discourse assumes policy leads technology (suggesting that guardrails can be installed before the highway opens), this essay documents technology creating policy, with infrastructure operational before legislatures review it.

GAO-style oversight reform treats the system as broken and fixable through better hearings, reports, and audits. This essay suggests the system isn’t malfunctioning; it’s optimised for a different purpose. Oversight isn’t something to fix but something the system learns from: friction that trains the model.

Zuboff’s surveillance capitalism1 explains data extraction as a business model. This essay connects that logic to statecraft: procurement contracts, IL5 accreditation, operator cells. It’s Zuboff’s thesis with a budget and a security clearance.

Deregulatory framing celebrates streamlined procurement as cutting red tape. This essay argues that streamlined process is itself the new governance: accreditation regimes, vendor lists, and platform permissions don’t precede power, they constitute it.

Scott’s Seeing Like a State2 comes closer: his analysis of how states make populations readable in order to govern them anticipated much of this architecture. But Scott’s ‘high modernism’ depended on simplifying society, wiping out local knowledge and squeezing disorganised reality into neat bureaucratic boxes. The new architecture claims a different trick: to preserve complexity while still governing it, to see without simplifying, to act on the particular rather than the general. Whether this is true or merely a more sophisticated simplification remains unclear. What is clear, however, is that Scott’s ‘seeing’ has become sensing, and sensing has become continuous.

Harari’s dataism3 comes closest to the underlying ideology. The belief that information flow is the supreme good, that societies are data-processing systems, that algorithms will inevitably outperform human judgment — this is the philosophy the architecture runs on. But dataism remains a theory of history, a claim about where things are heading. This essay shows dataism not as prediction but as deployment: the contracts signed, the platforms accredited, the loops closing. The question is no longer whether to trust the algorithm over human intuition. The question is which humans set the parameters before the algorithm runs.

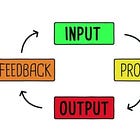

What’s missing in each framework is the loop itself — the perception-prediction-actuation-learning cycle that connects sensing to intervention. The loop explains what we’re seeing: decisions speed up because data goes stale fast; teams are staffed for short, rapid deployment cycles; interoperability makes the model easy to copy across countries; finance and governance merge as APIs become the ‘control levers’. Other frameworks treat these as separate political or economic stories. The loop reveals them as the same underlying process executing in real time.

The architecture has four layers: perception (global surveillance, satellite tracking, real-time data streams, cross-domain sensing), prediction (Digital Twins and AI platforms for modelling and forward projection, identity resolution across datasets), actuation (clearance granted or denied at chokepoints — transactions, shipments, applications, access), and learning (outcomes refine models, exceptions become categories, parameters tighten). Connected through shared infrastructure — cloud platforms, accreditation regimes, procurement frameworks — these layers form a closed loop. The fourth layer is what makes it adaptive — and what absorbs human judgment into the loop. Enforcement doesn’t feel like a ban — it’s experienced as hassle: delays, failed transfers, queues, and extra checks.
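To make the four-layer loop concrete, here is a toy Python sketch, not a model of any real system: each layer is a method, and the learning step tightens a single clearance threshold whenever a cleared case later turns out badly. The class name `Loop`, the 0 to 100 integer risk scores, the starting threshold of 50 and the step of 5 are all hypothetical.

```python
from dataclasses import dataclass

# Toy illustration only: `Loop`, the 0-100 risk scores, the starting threshold
# and the step size are hypothetical, not drawn from any deployed system.

@dataclass
class Loop:
    threshold: int = 50   # actuation parameter: scores above this do not clear
    step: int = 5         # how much one bad outcome tightens the threshold

    def perceive(self, event: dict) -> dict:
        # Perception: stands in for fused data streams; here the event passes through.
        return event

    def predict(self, observation: dict) -> int:
        # Prediction: a risk score, supplied directly with the event in this sketch.
        return observation["risk"]

    def actuate(self, score: int) -> bool:
        # Actuation: clearance is granted or withheld at the chokepoint.
        return score <= self.threshold

    def learn(self, cleared: bool, bad_outcome: bool) -> None:
        # Learning: a cleared case that later goes wrong tightens the parameter.
        if cleared and bad_outcome:
            self.threshold = max(0, self.threshold - self.step)

    def run(self, event: dict) -> bool:
        cleared = self.actuate(self.predict(self.perceive(event)))
        self.learn(cleared, event["bad_outcome"])
        return cleared


loop = Loop()
first = loop.run({"risk": 45, "bad_outcome": True})    # clears, then threshold tightens to 45
second = loop.run({"risk": 48, "bad_outcome": False})  # would have cleared at 50; now it doesn't
print(first, second, loop.threshold)
# True False 45
```

No one decided that a score of 48 should stop clearing; a single outcome moved a parameter, and the gate moved with it. That is the sense in which enforcement arrives as hassle rather than as a ban.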

The pace is the decisive factor. In the fourteen days between December 9 and December 23, 2025, GenAI.mil launched and announced its expansion; the Genesis Mission, created by executive order on November 24, moved to a $320 million investment and then to 24 vendor partnerships; federal-state preemption battles began; and two major geopolitical settlements advanced through a two-person team working outside normal diplomatic channels. Infrastructure deploys daily; oversight runs on legislative and judicial timescales.

The asymmetry is structural.

Three mechanisms constitute power in this architecture: global ‘black box’ modelling (typically presented as an ‘ethical imperative’), financial settlement (the monetary substrate), and, most overlooked of all, credentials (which define what you are allowed to do at all). Accreditation is the most powerful chokepoint4, but without the modelling layer to define compliance and the financial layer to enforce it, the gate has nothing to guard.

Zuboff got the model, Scott got the seeing, Harari got the data, central banking critics got the finance — but credentials generally fall through the gap, and few see all of these as connected.

The endpoint isn’t the removal of human judgment; it’s absorption. Humans handle exceptions, but those exceptions become categories, and categories become protocols. The system learns from what humans usually approve: oversight becomes feedback, feedback becomes training, training becomes policy. Once the settings are fixed, the system decides. Human judgment survives only as the parameters it helped set; the humans themselves are outside the decision loop.

A Note on Method

This essay documents convergence, not conspiracy: systems built for compatibility behave as if coordinated, whether or not anyone planned it. Evidence is contracts, partnerships, deployment timelines — not leaked memos.

‘Governance’ here means the power to allocate rights, access, and constraints at scale — through administrative systems, not just laws. If a platform decides what can be asked or who can transact, that’s governance. The question is who it answers to.

This is a diagnosis, not a prediction. Friction is real; the question is what governance looks like while this is still being built.

The Single Interface

GenAI.mil launched on December 9 with Google’s Gemini for Government5. Within two weeks, the War Department6 — the Trump administration’s framing for the Department of Defense (DoD) — announced expansion to include xAI7:

GenAI.mil, recently launched as the War Department’s bespoke AI platform, will soon be expanded with the addition of xAI for Government’s suite of frontier‑grade capabilities... Users will also gain access to real‑time global insights from the X platform, providing War Department personnel with a decisive information advantage.

Three million military and civilian personnel are being routed toward a single interface now contracted to integrate Grok-class models with X’s real-time stream — as enterprise access expands and mandated adoption language spreads through training guidance.

In a video announcement on December 9, Defense Secretary Hegseth directed8:

I expect every member of the department to login, learn it, and incorporate it into your workflows immediately. AI should be in your battle rhythm every single day. It should be your teammate.

The platform consolidates what were previously fragmented approaches to AI (NIPRGPT9, CamoGPT10, AskSage11) into a single interface. All tools are certified for Controlled Unclassified Information (CUI)12 and Impact Level 5 (IL5)13: secure enough for operational use, opaque by default to the public14.

IL5 is the accreditation moat. It requires physical separation of servers, US citizenship for all personnel with potential access, and high-frequency auditing — hundreds of millions in infrastructure investment and years of compliance labor. Requiring IL5 for GenAI.mil narrows the field to a few big cloud firms and their partners. Once an agency’s data sits in an IL5-approved environment, moving it out becomes costly. Accreditation thus becomes vendor lock-in.

Three procurement instruments make the lock-in irreversible:

OTA (Other Transaction Authority)15: bypasses traditional competitive bidding for ‘prototypes’, allowing hand-picked vendors without multi-year procurement cycles.

ATO (Authority to Operate)16: the security sign-off. Once it is issued, migration becomes an unacceptable security risk.

CRADA (Cooperative Research & Development Agreement)17: blurs intellectual property lines between agencies and vendors, so the model itself becomes a national security asset that can’t be switched.

Pentagon CTO Emil Michael confirmed additional models — Anthropic’s Claude, OpenAI’s ChatGPT — will federate onto the platform ‘in days or weeks’18. The multi-vendor strategy consolidates control at the platform layer while diversifying the models beneath it.

The platform determines what questions can be asked, what data can be accessed, and what answers surface. The models compete beneath that constraint: three million users accessing AI through one interface, governed by one set of permissions, shaped by one accreditation regime, regardless of which model generates the response. Model neutrality is a myth: the platform layer determines which model sees which data and which agentic tools can act. The interface is the power, not the models.

The feedback advantage compounds this. Three million users routed through one interface generate a proprietary dataset of human-in-the-loop decisions: which outputs get accepted, which get rejected, which trigger escalation. This data tunes the models, creating a performance gap no newcomer can bridge. First movers get training signal at government scale.

Hallucination risk often accelerates this architecture rather than slowing it. Much like every ‘Digital Twin’ misprediction is transformed into a call for more surveillance, every failure mode becomes demand for constrained interfaces, approved tools, and auditable chains of custody. This creates a ratchet: every failure justifies expanded autonomous authority to prevent the next one.

The human becomes the bottleneck. When system velocity exceeds human latency, the human is architecturally excluded — not by decision, but by math. More infrastructure is the only thing that can fix infrastructure.

Genesis Acceleration

The Genesis Mission moved from executive order19 (November 24) to investment20 ($320 million, December 10) to vendor consolidation (24 MOUs, December 18) in less than a month21.

The December 18 announcement formalised partnerships with NVIDIA, Google DeepMind, Accenture Federal Services, IBM, and twenty additional organisations.

Google DeepMind’s commitment is immediate22:

Google DeepMind will provide an accelerated access program for scientists at all 17 DOE National Laboratories to our frontier AI models and agentic tools, starting today with AI co-scientist on Google Cloud.

The Genesis EO’s Section (e), directing review of ‘robotic laboratories and production facilities with the ability to engage in AI-directed experimentation and manufacturing’, is not waiting for the 240-day review timeline23. The infrastructure is being commissioned in parallel: Secretary of Energy Chris Wright commissioned the Anaerobic Microbial Phenotyping Platform at Pacific Northwest National Laboratory within weeks of the announcement, billed as ‘the world’s largest autonomous-capable science system’24.

Genesis extends beyond a ‘civilian brain’ into actuation: AI systems generate hypotheses, robotic laboratories run experiments, results refine the models, and the cycle repeats. The ‘closed-loop AI experimentation platform’ connecting supercomputers, AI systems, and robotic laboratories is funded, partnered, and deploying.

The same loop structure — perception, modelling, action, feedback — operates in GenAI.mil (intelligence → operations → assessment) and in the predictive enforcement systems described in the previous essays (data fusion → risk modelling → intervention → outcome). Genesis extends that architecture into physical science and manufacturing.

Governance shifts from human-in-the-loop (the human decides) to human-on-the-loop (the human audits, handles exceptions, reviews outputs). The system proposes; the human disposes, but the system sets the menu and the tempo. Over time, that becomes the decision.

The transition has phases: model proposes, human approves; model proposes, human approves rarely; model acts, human handles exceptions; exceptions become categories with escalation protocols; protocols get optimised based on which escalations humans routinely affirm. The endpoint isn’t removal of humans — it’s absorption of their judgments. Oversight becomes feedback; feedback becomes training; training becomes policy.
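The later phases of that sequence can be sketched as a small escalation desk in Python. This is a hypothetical illustration, not any agency’s workflow: `EscalationDesk`, `MIN_CASES` and `AUTO_RATE` are invented names, and the promotion rule (a category becomes automatic once humans have approved at least 90% of at least five reviewed cases) is an assumption chosen only to show how routine affirmations can harden into protocol.

```python
from collections import defaultdict
from typing import Optional

# Hypothetical parameters, chosen only for illustration.
MIN_CASES = 5     # reviewed cases required before a category can be promoted
AUTO_RATE = 0.9   # share of human approvals that triggers promotion


class EscalationDesk:
    def __init__(self) -> None:
        self.history = defaultdict(list)  # category -> human verdicts (True = approved)
        self.protocols = set()            # categories now decided without a human

    def decide(self, category: str, human_verdict: Optional[bool] = None) -> str:
        if category in self.protocols:
            return "auto-approved"        # the human is no longer consulted
        if human_verdict is None:
            return "escalated"            # still an exception awaiting review
        verdicts = self.history[category]
        verdicts.append(human_verdict)
        if len(verdicts) >= MIN_CASES and sum(verdicts) / len(verdicts) >= AUTO_RATE:
            self.protocols.add(category)  # routine affirmation becomes policy
        return "approved" if human_verdict else "denied"


desk = EscalationDesk()
print(desk.decide("late-filing"))   # escalated
for _ in range(5):
    desk.decide("late-filing", True)
print(desk.decide("late-filing"))   # auto-approved
```

The reviewers’ five judgments are not discarded; they become the rule that replaces the reviewer.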

The Transatlantic Mirror

The same week Genesis consolidated its vendor partnerships, Google DeepMind announced its first ‘automated research lab’ in the UK25:

The AI company will open the lab, which will use AI and robotics to run experiments, in the U.K. next year. It will focus on developing new superconductor materials... British scientists will gain ‘priority access’ to some of the world’s most advanced AI tools.

The UK government simultaneously announced the potential for ‘Gemini for Government’ to ‘cut bureaucracy, automate routine tasks, and free up civil servants’26.

Same vendor, same capability, same justification language — deployed across jurisdictions in the same news cycle.

The original essays described Phase 3 — international extension — as something still ahead, but the transatlantic deployment is happening in parallel: infrastructure standardising across sovereign boundaries faster than any single legislature can review it.

Interoperability is the quiet treaty: once the stack matches, policy can travel faster than politics. Coordination becomes default rather than deliberated.

The pattern holds across the Atlantic. The EU AI Act27 functions as centralised preemption — a Single Surface across 27 nations that strikes down member-state laws as ‘obstacles to the Single Market’. The EU AI Office28 becomes the continental operator cell, translating US-NATO stack requirements into implementing acts that bypass national legislatures.

Sovereignty is being deprecated.

Standards of Approval

This isn’t only platform rollout. It’s the approval regime that makes platforms portable.

NATO’s AI Strategy29 already frames ‘Responsible Use’ as something that must be operationalised through verification, validation, and lifecycle testing30 — explicitly referencing NATO and national certification procedures as the enforcement mechanism for reliability and traceability31.

Since 2023, NATO’s transformation apparatus has moved from principles to implementation: the Alliance has been developing a Responsible AI Certification Standard (RAICS)32 under the Data and Artificial Intelligence Review Board (DARB)33.

This is the missing link between ‘AI adoption’ and ‘AI governance’: once an alliance-level certification regime exists, interoperability stops being a diplomatic achievement and becomes a technical default.

When a nation adopts the RAICS certification regime, it signs a technical treaty — not ratified by a legislature but executed as a software licensing agreement. Interoperability becomes alignment, and nations that drift from consensus don’t face sanctions first; they face throttled API access and administrative friction.

The logic is explicit in accreditation industry language: mutual recognition arrangements ensure ‘acceptance of accredited services in many markets based on one accreditation’34 — national regulatory capacity bypassed by design. The structure isn’t new. Leonard Woolf’s International Government35 (1916) proposed the same architecture: international organisations (NGOs in contemporary terminology) mediating between nations, governance layered above the state. The 2025 version substitutes certification regimes for NGOs.

Carnegie’s Hague process promised arbitrated peace. What it built was a war clearinghouse: infrastructure to manage conflict as a system output, holding the right to determine what counts as a rightful use of force versus an illegal invasion. The Board of Peace in Gaza doesn’t create peace; it creates conditional access to peace. When conditions aren’t met, the inverse clears.

Sense at Scale

The sensing side of the loop is being funded at the same tempo as the modelling side.

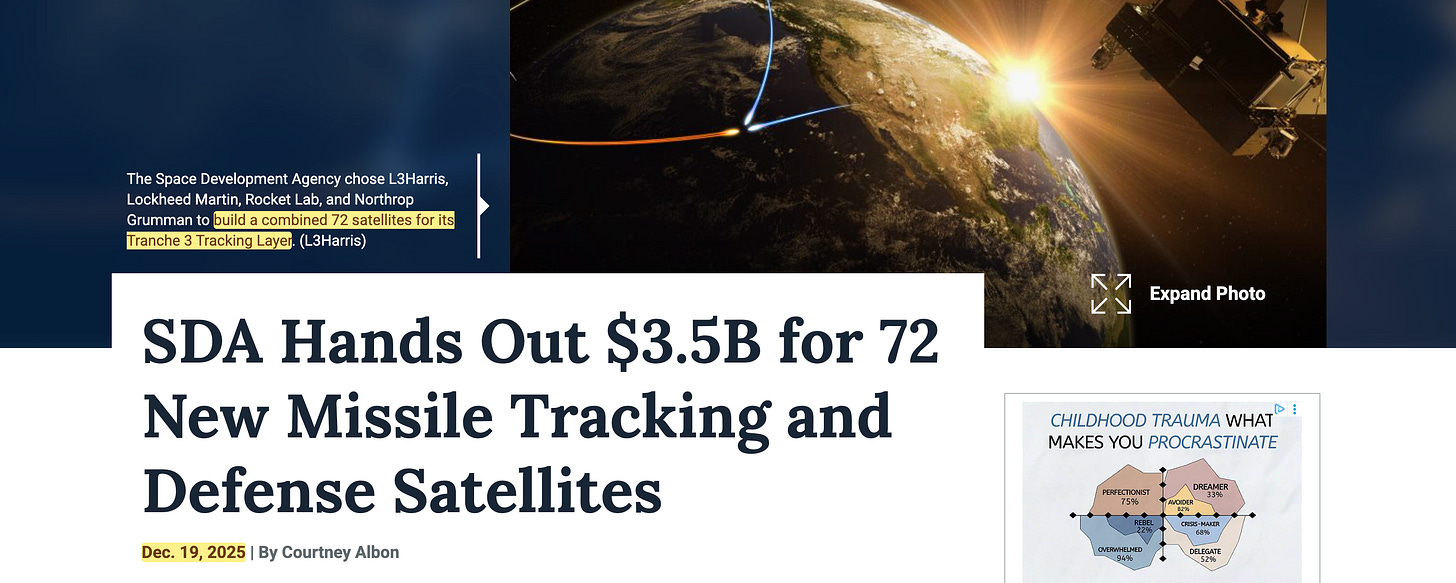

Space Development Agency contract awards for the Tranche 3 Tracking Layer36, structured so that each awardee delivers and operates 18 satellites, show the state buying higher ‘frame rate’ perception as an operational baseline37. This is procurement as perception: the inputs to the modelling layer are being provisioned in parallel with the compute, the platforms, and the actuation mechanisms.

The loop requires all four layers to close. Sense at scale makes the rest legible.

The Operator Cell

By ‘operator cell’ I mean a small, cross-theatre interface that can move faster than formal institutions and translate settlement terms directly into implementable architectures. Two names appear across both major theatre negotiations: Steve Witkoff and Jared Kushner.

Ukraine

On December 2, Witkoff and Kushner met with Putin at the Kremlin, where Putin was joined by envoy Kirill Dmitriev and foreign policy adviser Yuri Ushakov. The meeting lasted approximately five hours38. The American envoys presented documents outlining a phased settlement plan. Ushakov confirmed to reporters afterward: ‘A compromise hasn’t been found yet, but some American proposals seem more or less acceptable’39.

On December 20–21, Dmitriev travelled to Miami for follow-up negotiations with the same operator cell. Witkoff described the talks as ‘productive and constructive’. The Kremlin confirmed Dmitriev would brief Putin upon returning to Moscow40. By December 23, Zelenskiy confirmed that multiple draft documents had been prepared following the Miami talks41, including security guarantees and recovery framework drafts. Architecture becoming paperwork.

Gaza

On December 20, Witkoff convened representatives from the US, Egypt, Qatar, and Turkey in Miami to review Phase 1 implementation and advance Phase 2 preparations42. The joint statement:

In our discussions regarding phase two, we emphasized enabling a governing body in Gaza under a unified Gazan authority to protect civilians and maintain public order... We expressed our support for the near-term establishment and operationalization of the Board of Peace as a transitional administration43.

Turkey’s Foreign Minister Fidan confirmed44:

Turkey expects the second phase of a Gaza ceasefire deal to begin early in 2026... the priority was for Gaza’s governance to be taken over by a Palestinian-led group.

The Pattern

Ukraine (Witkoff/Kushner; Dmitriev → Putin): phased plan, territorial conditions, security guarantees

Gaza (Witkoff/Kushner; Turkey/Egypt/Qatar): Board of Peace, ISF, technocratic committee

Both settlements feature phased conditionality (compliance unlocks subsequent phases), technocratic administration (governance by appointed committee), international oversight bodies, and compressed timelines that foreclose extended deliberation.

Witkoff and Kushner function as the interface between settlement theatre and the implementation stack. The operator cell connects:

Capital: Kushner’s investment relationships (Saudi, UAE, Israeli tech sector)

Oversight architecture: Board of Peace, security guarantee frameworks

Certification logic: conditions that unlock phases, compliance that enables participation

Vendor/contract ecosystem: the same commercial infrastructure deploying across federal agencies

When Robert McNamara moved from Defense Secretary to World Bank president45, he carried PPBS (Planning-Programming-Budgeting System) methodology into conditional lending: countries that wanted loans had to restructure their planning processes and adhere to conditions to receive funding. The operator cell performs a similar function at compressed timescales: settlement terms negotiated in one theatre embed conditions that propagate through implementation infrastructure.

Two major geopolitical settlements — Europe’s largest land war since 1945 and a restructuring of Palestinian governance — are being processed through a two-person interface operating outside traditional diplomatic channels. The outputs resemble implementation specifications (phases, conditions, auditing, oversight bodies) rather than political settlements.

The Board of Peace

The Gaza Phase 2 architecture deserves specific attention. The settlement structure is described (in reporting and leaked documents) as including:

Board of Peace: An international transitional body headed by President Trump, overseeing civilian, security, and reconstruction tracks46

Palestinian Technocratic Committee: An appointed body of ‘qualified Palestinians and international experts’ responsible for day-to-day administration47

International Stabilization Force (ISF): A multinational security force with proposed contributions from Italy, Egypt, Indonesia, Azerbaijan, and Turkey48

Demilitarisation conditions: Phased disarmament tied to governance transfer

This is conditional sovereignty in explicit form. Governance is not transferred through election or self-determination but through certification — compliance with conditions set by external actors, verified by an international oversight board, enforced through an international security presence.

Structurally, it reads less like a handoff than a recurring audit loop: UN Security Council Resolution 2803 requires periodic reporting; the Board of Peace oversees ‘implementation steps’ with built-in review points; the ISF mandate appears designed to require extension decisions49. The effect is to substitute certification for legitimacy: governance becomes what clears.

Every control mechanism comes with an ethical wrapper: reconstruction is ‘humanitarian’, surveillance is ‘public health’, preemption is ‘preventing fragmentation’, e-wallets are ‘financial inclusion’, the Board is ‘peace’. The wrapper makes contestation feel immoral — you’re not opposing a control system, you’re opposing equity, safety, progress. The legitimation layer is how the architecture installs without resistance.

If local legitimacy aligns, the architecture looks like reconstruction. If it doesn’t, it looks like an audit regime backed by an ISF. Same structure; different moral interpretation.

The architecture is being negotiated now: Turkey expects Phase 2 to begin ‘early 2026’; the ISF deployment timeline is being discussed at US Central Command conferences in Doha50; and the technocratic committee composition is being vetted by Israel, which controls NGO registration and determines which organisations can operate in Gaza at all. The stress test is already visible: on December 23, Israel’s defence minister stated that IDF forces will remain in Gaza ‘indefinitely’, directly contradicting the phased withdrawal that the Board/ISF architecture requires51.

The finance rail is already moving. The World Bank established the Gaza Reconstruction and Development Fund as a Financial Intermediary Fund in December52, with resources to be transferred to ‘an internationally recognized legal entity authorized to facilitate, finance, and oversee recovery, reconstruction, and development activities in Gaza’53. That entity is the Board of Peace — the World Bank document doesn’t name it, but UNSC 2803 gives the Board exactly that mandate. The connection is structural, not announced.

Meanwhile, the digital access layer is scaling: in November, financial service providers resumed creating e-wallets, and WFP distributed e-wallet transfers to over 245,000 people in October, more than triple September’s volume54. Banks are reopening accounts, reactivating frozen accounts, and issuing digital wallets. The wallet becomes the certification layer at the individual level: access to reconstruction funds flows through digital identity verification, not citizenship.

The convergence: Board of Peace (authority) + World Bank FIF (finance) + e-wallet scaling (digital access) + UN Global Marketplace (UNGM) vendor registration (procurement accreditation). Four rails, one architecture.

The system doesn’t decide to withhold aid; the aid simply fails to clear if conditions aren’t met. Governance by default. No human technically denies the funds — the transaction just doesn’t clear.
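The ‘no one denies it, it just doesn’t clear’ pattern can be shown with a short Python sketch in which conditions are predicates and the clearing function has no ‘denied’ state at all. `Transfer`, `CONDITIONS`, `clear` and the two condition names are hypothetical, invented for illustration; nothing here describes how the World Bank fund, WFP or any e-wallet provider actually processes payments.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


# Hypothetical request type and conditions, for illustration only.
@dataclass(frozen=True)
class Transfer:
    wallet_id: str
    identity_verified: bool
    phase_unlocked: bool


CONDITIONS: Dict[str, Callable[[Transfer], bool]] = {
    "identity_verified": lambda t: t.identity_verified,
    "phase_unlocked": lambda t: t.phase_unlocked,
}


def clear(t: Transfer) -> Tuple[str, List[str]]:
    """Return ('cleared', []) or ('pending', unmet_conditions).

    There is deliberately no 'denied' outcome: a transfer that fails a
    condition simply stays pending, with no human decision recorded."""
    unmet = [name for name, check in CONDITIONS.items() if not check(t)]
    return ("pending", unmet) if unmet else ("cleared", [])


print(clear(Transfer("w-001", True, True)))    # ('cleared', [])
print(clear(Transfer("w-002", True, False)))   # ('pending', ['phase_unlocked'])
```

Because the output is ‘pending’ rather than ‘denied’, there is no decision for anyone to appeal; changing who gets paid means editing `CONDITIONS`, which is exactly the parameter-setting the text places above the population.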

The architecture imports a template familiar from Ukraine’s reconstruction, and if it stabilises in Gaza, it won’t stop there. The same clearance logic can migrate into domestic ‘benefit modernisation’: same rails, different populations.

In a December interview, Trump confirmed the composition: ‘kings, presidents, prime ministers’ forming ‘one of the most legendary boards ever’55. The original design was technocratic — diplomats, envoys, administrators. The upgrade to heads of state is being framed as spontaneous demand: ‘everybody wants to be on it’. Governance architecture marketed as exclusive membership.

Not Plato’s philosopher kings. Just kings: investor kings, donor kings, normalisation-deal kings. Qatar’s Emir, the UAE’s Mohammed bin Zayed, potentially Saudi Arabia’s MBS. The ones with capital, leverage, and a regional interest in what Gaza becomes. The Kushner network that runs sovereign wealth relationships now runs ‘peace’ architecture. David Sacks, the White House AI and crypto czar whose portfolio benefits from AI deployment, now sets AI policy56.

Gaza doesn’t get a board seat. Gaza becomes a tier: one level in a stratified system where the Board sits above, setting parameters, and the population sits below, clearing them or not. The higher the tier, the more power. The lower the tier, the more the system feels like weather: something that happens to you but that you cannot affect.

The question of who governs Gaza is being answered through architecture, not politics, though the people of Gaza will surely be consulted on which flowers to plant in the decorative town square beds, in yet another instance of participation theatre.

If the architecture succeeds, it becomes the template, the way the World Commission on Dams57 formalised stakeholder governance for a generation of development projects. The WCD proved you could manage a global resource by creating a governance layer above the nation-state, with accreditation as the condition for World Bank funding. The Board of Peace applies the same logic to territorial conflict and population management. What changed isn’t the structure. It’s the tempo (real-time vs. multi-year), the actuator (e-wallets vs. reports), and the membership (investor-sovereigns vs. stakeholder theatre). The cockpit was built more than twenty-five years ago. The 2025 version plugs in AI and the financial rails to make it autonomous.

The Preemption Collision

On December 11, President Trump signed an executive order establishing an AI Litigation Task Force to challenge state AI laws58:

Within 30 days of the date of this order, the Attorney General shall establish an AI Litigation Task Force whose sole responsibility shall be to challenge State AI laws inconsistent with the policy set forth in section 2 of this order.

The order directs the Commerce Department to identify ‘onerous AI laws’ within 90 days and instructs agencies to consider withholding federal broadband funding from non-compliant states. Most state AI laws are ‘AI ethics’ laws: transparency requirements, bias audits, disclosure mandates. Preempting them centralises control over the ethical substrate: who sets the parameters that shape what AI systems can and cannot do, what information they can divulge, and how they frame it.

One week later, New York Governor Kathy Hochul signed the RAISE Act anyway59.

The law requires large AI developers to publish safety protocols and report incidents within 72 hours, and it creates an oversight office within the Department of Financial Services. Violations carry fines of up to $1 million for a first offence and $3 million for subsequent violations. State Senator Andrew Gounardes60:

Big tech oligarchs think it’s fine to put their profits ahead of our safety — we disagree... And we defeated Trump’s — and his donors’ — attempt to stop RAISE through executive action greenlighting a Wild West for AI.

The collision is now explicit:

Federal position: One ethics framework, not fifty. State regulation creates ‘fragmentation’ that undermines innovation.

State position: Federal inaction requires state leadership. Two major economies (California, New York) setting de facto standards.

Fragmentation is treated as a technical fault to be patched — via preemption, unified standards, and compliance-as-API.

The AI Litigation Task Force has 30 days to form, the Commerce Department has 90 days to identify targets, and legal challenges will take years. Meanwhile, infrastructure deployment continues daily.
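Those deadlines translate into calendar dates with simple arithmetic. The Python sketch below assumes naive counting (N calendar days from the December 11 signing date, with no business-day or legal conventions applied) and sets them against the fourteen-day window from December 9 to December 23 described earlier; `deadline` is a hypothetical helper, not anything defined in the order.

```python
from datetime import date, timedelta

# Assumption: "within N days" is counted as N calendar days from signing.
ORDER_SIGNED = date(2025, 12, 11)


def deadline(days: int) -> date:
    """Naive deadline: signing date plus N calendar days."""
    return ORDER_SIGNED + timedelta(days=days)


task_force_due = deadline(30)      # AI Litigation Task Force established
commerce_list_due = deadline(90)   # Commerce identifies 'onerous' state AI laws
deployment_window = (date(2025, 12, 23) - date(2025, 12, 9)).days

print(task_force_due, commerce_list_due, deployment_window)
# 2026-01-10 2026-03-11 14
```

On that reading, the Commerce list is not due until March 11, 2026, more than six fourteen-day windows after signing; the litigation it feeds comes later still.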

The structural point from the original essays holds: the system iterates faster than oversight mechanisms can respond. By the time courts rule on state preemption, the platform layer will be operational across federal agencies, defense installations, and allied governments.

The asymmetry is structural. States operate on the legislative track: years of debate, then statute, then litigation, then compliance. The federal government operates on the procurement track: contract, accreditation, deployment, fact. By the time state litigation reaches the Supreme Court, the stack will already be the industry standard. The states will be left regulating a ghost — a version of the technology that no longer exists in operational reality. Preemption as a service.

The legal battle is real — and, in operational terms, a rearguard action.

Quiet Domestic Extension

While the legal fight unfolds, the stack extends into civilian administration.

Health: HHS issued a Request for Information in December seeking comment on AI in clinical care61 — not research applications, but deployment: reimbursement policy, regulatory pathways, adoption barriers, workflow integration62. The RFI explicitly asks how to accelerate AI deployment across the healthcare system. This means coverage decisions, billing codes, eligibility determinations, compliance requirements. The reimbursement rail is the health system’s equivalent of the payment rail: what clears, what doesn’t.

Workforce: OPM announced ‘US Tech Force’ in December63 — a surge-hiring initiative targeting 1,000 technologists on two-year terms, placed directly into agencies to accelerate AI and data modernisation64. This is the human complement to platform rollout: while procurement closes the infrastructure bottleneck, staffing closes the implementation bottleneck.

Personnel: On December 10, OPM and OMB directed agencies to pause fragmented HR modernisation efforts and align to a governmentwide ‘Core HCM’ approach65. This is the GenAI.mil logic applied to administration: a single optimisation surface for the federal workforce itself — where identity, permissions, and workflow become legible to a shared platform rather than a thousand bespoke systems.

The pattern: infrastructure procurement creates capability, workforce surge creates operators, regulatory harmonisation creates legal cover, administrative consolidation creates the internal surface.

Each layer reinforces the others.

Palantir Expansion

Meanwhile, the data integration layer continues to expand:

$10 billion Army Enterprise Agreement (August 2025): Consolidates 75 contracts into a single framework for Palantir’s software and data services across the Army and other DoD components66.

$448 million Navy contract: Foundry and AIP for shipbuilding operations and supply chain67.

USCIS ‘VOWS’ platform (December 2025): A new contract to implement ‘vetting of wedding-based schemes’ for immigration fraud detection68.

The USCIS contract is small ($100,000 for Phase 0) but structurally significant. It extends Palantir’s data integration capabilities from defense and intelligence into immigration services — the administrative layer that determines who can enter, settle, and naturalise.

Combined with the previously documented IRS audit selection models, SSA benefits processing automation, and DHS’s 105 AI use cases, the pattern is consistent: agency by agency, function by function, the state’s perception and decision-making infrastructure is being consolidated onto platforms that enable cross-domain data fusion.

Palantir is the leading candidate for the fusion layer: the tooling class that resolves identity across datasets and turns model outputs into executable workflows. Whether Palantir or a successor, this layer is structurally necessary for single-surface governance. It connects the AI platforms (GenAI.mil, agency-specific tools) to the administrative systems that touch citizens (benefits, taxes, immigration, law enforcement). When the original essays described cross-domain fusion as a structural consequence of shared infrastructure, this is what it looks like in procurement.
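In generic data-engineering terms, ‘resolving identity across datasets’ is entity resolution: deciding which records held in different systems refer to the same person. Below is a minimal sketch. It assumes hypothetical record fields and a deliberately naive rule: any two records that share an identifier value are merged, transitively, through a union-find structure. Real systems use probabilistic matching and review queues. Nothing here reflects Palantir’s actual tooling or any agency schema.

```python
from collections import defaultdict


class UnionFind:
    """Disjoint-set structure: each record starts in its own cluster."""

    def __init__(self, n: int) -> None:
        self.parent = list(range(n))

    def find(self, x: int) -> int:
        # Path halving keeps the trees shallow.
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]
            x = self.parent[x]
        return x

    def union(self, a: int, b: int) -> None:
        ra, rb = self.find(a), self.find(b)
        if ra != rb:
            self.parent[rb] = ra


def resolve_identities(records: list[dict[str, str]]) -> list[set[int]]:
    """Cluster record indices that share any identifier (hypothetical naive rule)."""
    uf = UnionFind(len(records))
    first_seen: dict[tuple[str, str], int] = {}
    for i, rec in enumerate(records):
        for key, value in rec.items():
            if key == "source":
                continue  # provenance label, not an identifier
            token = (key, value)
            if token in first_seen:
                uf.union(first_seen[token], i)
            else:
                first_seen[token] = i
    clusters: dict[int, set[int]] = defaultdict(set)
    for i in range(len(records)):
        clusters[uf.find(i)].add(i)
    return sorted(clusters.values(), key=min)


# Hypothetical records from four separate systems.
records = [
    {"source": "tax", "email": "a@example.org", "passport": "X1"},
    {"source": "benefits", "email": "a@example.org", "phone": "555-0100"},
    {"source": "immigration", "phone": "555-0100", "passport": "Y9"},
    {"source": "health", "email": "b@example.org"},
]
print(resolve_identities(records))  # [{0, 1, 2}, {3}]
```

The transitive step carries the weight: the tax and immigration records share no identifier directly, yet they end up in one cluster because each links to the benefits record.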

The Actuation Rail

The architecture described in these essays — AI platforms for perception and modelling, data fusion for identity resolution, settlement frameworks for governance — requires an actuation layer. Decisions must translate into consequences.

Venezuela provides a current example of how clearance-based enforcement operates at scale.

Recent reporting documents US interdiction of Venezuelan oil shipments: slowed loadings69, U-turns at sea, and PDVSA disruption following a cyberattack. The mechanism is throughput control: the ability to determine which cargoes clear, which routes remain viable, which counterparties face secondary sanctions risk.

This is the same structural logic applied to trade flows that the payment rail discussions apply to transactions: conditionality embedded in infrastructure rather than debated in legislatures. Compliance becomes a prerequisite for participation. Enforcement manifests as friction, delay, and routing failure.

This connects to the broader architecture:

Perception (Input): Satellite tracking, AIS data, financial intelligence identify cargo movements

Modelling (Process): Risk scoring determines which shipments warrant intervention

Decision (Output): Clearance granted or denied at chokepoints (ports, insurance, banking correspondents)

Feedback: Outcomes refine targeting for subsequent interdiction

The same loop operates here at the level of economic enforcement — and the loops are becoming faster than the political processes that might contest them.
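The four stages can be read as a control loop. The sketch below is a toy version. Its features, weights, and thresholds are all hypothetical, and none are taken from any real interdiction system. A linear risk score stands in for modelling, a threshold stands in for the decision, and a bounded threshold update stands in for feedback.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """Perception (input): hypothetical features for one shipment."""
    ais_gap_hours: float          # hours with the transponder dark
    flagged_counterparty: bool    # counterparty on a watch list
    route_deviation_km: float     # distance off the declared route


def risk_score(obs: Observation) -> float:
    """Modelling (process): a hypothetical linear score, capped at 1.0."""
    score = 0.02 * min(obs.ais_gap_hours, 24)              # contributes at most 0.48
    score += 0.4 if obs.flagged_counterparty else 0.0
    score += 0.0005 * min(obs.route_deviation_km, 240)     # contributes at most 0.12
    return score


def decide(score: float, threshold: float) -> bool:
    """Decision (output): True means clearance is withheld."""
    return score >= threshold


def update_threshold(threshold: float, false_positive: bool, step: float = 0.05) -> float:
    """Feedback: loosen after a false positive, tighten after a confirmed hit."""
    new = threshold + step if false_positive else threshold - step
    return min(max(new, 0.1), 0.9)  # keep the threshold within hypothetical bounds


threshold = 0.5
tanker = Observation(ais_gap_hours=12, flagged_counterparty=True, route_deviation_km=0)
score = risk_score(tanker)                 # 0.24 + 0.40 + 0.0
held = decide(score, threshold)            # True: clearance withheld
threshold = update_threshold(threshold, false_positive=False)  # confirmed hit tightens the loop
```

The feedback rule is where the adaptation sits. Each confirmed hit lowers the threshold for later cycles, and each false positive raises it, so the parameter drifts without a separate policy decision.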

Project Rosalind70 (BIS, Bank of England) demonstrated API designs for conditional retail CBDC payments: three-party locks where conditions must clear before transactions complete. The Venezuelan interdiction model shows what conditionality looks like when applied to physical trade flows.

Meridian71 is the settlement primitive for that logic. Where interdiction operates as a denial of service in physical flows, Meridian demonstrates how financial flows can be orchestrated across ledgers: a Synchronisation Operator coordinates RTGS money with assets on an external ledger so they settle together, only when defined conditions are met72. It doesn’t create the policy or decide the conditions; it makes conditionality enforceable at the rail level once upstream systems (compliance rules, sanctions screening, contractual triggers, authorisations) define what must clear73.

In other words: permission lives upstream; orchestration lives here. The significance is that orchestration compresses enforcement from ‘institution-by-institution plumbing’ into an API-shaped control surface.
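The behaviour described as ‘settle together, only when defined conditions are met’ resembles a two-phase lock-and-commit. The sketch below makes several simplifying assumptions. Each ledger can earmark (lock) funds or assets. An orchestrator commits every leg only if all locks succeed and all upstream conditions pass. Otherwise it releases the locks. The names `Ledger`, `Leg`, and `synchronise` are hypothetical, and this is not the Meridian or Rosalind interface. Conditions are passed in as callables, which mirrors the point above: permission is decided upstream, and the orchestrator only enforces it.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Ledger:
    """Toy ledger: balances plus amounts earmarked for pending settlements."""
    name: str
    balances: dict[str, int]
    locked: dict[str, int] = field(default_factory=dict)

    def lock(self, holder: str, amount: int) -> bool:
        available = self.balances.get(holder, 0) - self.locked.get(holder, 0)
        if available < amount:
            return False
        self.locked[holder] = self.locked.get(holder, 0) + amount
        return True

    def release(self, holder: str, amount: int) -> None:
        self.locked[holder] -= amount

    def commit(self, holder: str, recipient: str, amount: int) -> None:
        self.locked[holder] -= amount
        self.balances[holder] -= amount
        self.balances[recipient] = self.balances.get(recipient, 0) + amount


@dataclass
class Leg:
    ledger: Ledger
    payer: str
    payee: str
    amount: int


Condition = Callable[[], bool]


def synchronise(legs: list[Leg], conditions: list[Condition]) -> bool:
    """Settle all legs together or none: lock each leg, check conditions, then commit or release."""
    locked: list[Leg] = []
    for leg in legs:
        if not leg.ledger.lock(leg.payer, leg.amount):
            break
        locked.append(leg)
    ok = len(locked) == len(legs) and all(check() for check in conditions)
    for leg in locked:
        if ok:
            leg.ledger.commit(leg.payer, leg.payee, leg.amount)
        else:
            leg.ledger.release(leg.payer, leg.amount)
    return ok


# Hypothetical property-style trade: a cash leg on one ledger, a title leg on another.
cash = Ledger("rtgs", {"buyer": 300, "seller": 0})
title = Ledger("registry", {"seller": 1, "buyer": 0})
legs = [Leg(cash, "buyer", "seller", 250), Leg(title, "seller", "buyer", 1)]
settled = synchronise(legs, conditions=[lambda: True])  # e.g. a screen that passed upstream
```

If any condition returned `False`, both locks would be released and neither balance would move.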

The distance between ‘your shipment requires additional clearance’ and ‘your transaction requires compliance verification’ is architectural, not conceptual.

Clearance is the actuator: whether the object is a payment, a shipment, or a person, the mechanism is the same — permission embedded in infrastructure. When the loop closes fully — AI perception, data fusion, conditional clearance, automated enforcement — the question of who authorised the intervention becomes structurally difficult to answer.

The system simply intervenes because the parameters indicated intervention. Policy becomes what the optimisation surface does.

The binding equation:

AI cognition + identity resolution + conditional clearance = executable governance.

Three layers, one architecture. The perception layer determines what the system sees; the fusion layer determines who the system knows; the actuation layer determines what the system does. Connect them through shared infrastructure — cloud platforms, accreditation regimes, procurement frameworks — and the loop closes.

Integration happens through compatibility.

Why Now

Four conditions converged to make this window possible:

Capability threshold: Frontier models crossed into operational utility — the gap between ‘interesting demo’ and ‘deployable system’ closed in 2024-2025. GenAI.mil is enterprise infrastructure; Genesis is a closed-loop experimentation platform. The technology matured enough to justify procurement at scale.

Procurement infrastructure: Federal AI acquisition frameworks (OMB M-25-22, IL5 accreditation, FedRAMP equivalencies)74 matured in parallel. The question shifted from ‘can we buy this?’ to ‘how fast can we deploy it?’ Vendors had already built the compliance stack. The path from contract to operational deployment compressed from years to months.

Crisis tempo: Ukraine, Gaza, supply chain vulnerabilities, great-power competition — each provides justification for speed that bypasses deliberation. ‘We don’t have time for extensive review’ is the logic of emergency, and emergency has become the baseline operating condition. Oversight mechanisms designed for peacetime procurement cycles cannot keep pace with wartime deployment timelines.

Energy substrate: On December 18, FERC moved to force PJM to clarify and revise rules around co-locating large loads with generation—explicitly in the context of data centers and reliability75. Read structurally, this is the governance analogue of an emergency interface: rather than waiting for slow grid queues and fragmented utility processes, the regulator compels new rules so compute can plug in at speed. The hardware layer is being cleared in parallel with the software layer.

Co-location is the electrical equivalent of an OTA — a fast lane that exits public infrastructure. By plugging data centers directly into generation, ‘cockpit’ operators bypass multi-year interconnection queues and public utility oversight. When FERC directs PJM to revise rules for co-location, it codifies the right of the cognitive layer to exist outside the constraints of the civilian grid. Reliability is the ethical wrapper; physical decoupling is the result. The cockpit gets guaranteed power; the passengers get demand response mandates and price volatility.

The UK made this explicit in September 2024: data centers designated as critical national infrastructure76, eligible for protected status during load shedding. Residential customers remain in the rota: planned power cuts in three-hour blocks. The tiering is already policy.

None of these conditions required coordination: compute scaled because investment flowed, procurement matured because vendors needed government contracts, crisis tempo persisted because crises persisted, energy bottlenecks triggered regulatory patches. The convergence is structural — which is precisely what makes it difficult to contest. But the priorities are clear: data centers are simply more important.

Velocity

The original essays described a trajectory; the past two weeks demonstrated it.

Dec 9: GenAI.mil launches with Gemini

Dec 10: $320M Genesis Mission investment

Dec 10: OPM/OMB Core HCM consolidation directive

Dec 11: Trump signs AI preemption EO

Dec 11: UK-DeepMind partnership announced

Dec 18: Genesis Mission: 24 vendor MOUs

Dec 18: FERC co-located load order (data center power)

Dec 19: New York signs RAISE Act

Dec 20: SDA Tranche 3 Tracking Layer awards

Dec 20–21: Miami talks: Gaza + Ukraine

Dec 22: GenAI.mil xAI expansion announced

Dec 22: Turkey confirms Gaza Phase 2 timeline

Dec: HHS RFI on AI deployment in clinical care

Dec: OPM ‘US Tech Force’ surge hiring announced

Two weeks. Platform deployment, vendor consolidation, cross-theater settlement negotiation, federal-state collision, and international parallel rollout — all running simultaneously.

The essays asked what stops these systems from becoming a single optimisation surface. The answer emerging from the past two weeks: less than we thought.

The Observation

The past two weeks demonstrate acceleration: infrastructure deploying faster than the essays anticipated, vendor consolidation broader, cross-theater integration more explicit, state-level resistance already being litigated.

Friction points remain. Procurement delays could slow deployment. Security certification bottlenecks could stall platform expansion. Court injunctions could complicate state preemption. Budget fights could constrain Genesis funding. Inter-agency turf wars could fragment data sharing. Allied governments may resist infrastructure standardisation. Technical failures — hallucinations, security breaches, integration bugs — could trigger rollbacks. None of this is predetermined.

But the structural pressure is asymmetric. Every friction point creates demand for solutions that accelerate the architecture: procurement delays justify emergency authorities, certification bottlenecks justify streamlined processes, budget fights justify efficiency arguments, technical failures justify expanded investment in ‘getting it right’. The obstacles are real — the question is whether they slow the trajectory or provide justification for intensifying it.

None of this required announcement. It arrived as procurement, partnership, and operational deployment — below the threshold of political debate, above the speed of legislative response.

The Metadata of Governance

The most telling sign of this shift isn’t in the headlines — it’s in the job descriptions.

The ‘US Tech Force’ surge (1,000 technologists on two-year terms, $130K–$200K salaries) is the staffing of the operator cell at scale. Within days of announcement, OPM reported approximately 25,000 expressions of interest77 — 25x oversubscription. These aren’t permanent policy roles — they’re temporary actuators placed to make the stack operational. The job descriptions say it explicitly: ‘closing implementation gaps’, ‘embedding in agencies to drive operational AI use’, ‘upgrading government systems for AI supremacy’.

Two-year terms create urgency; private-sector partnerships (Palantir, xAI, Google, OpenAI) for training and post-service placement keep the human operators synced with frontier capabilities.

The pattern extends across agencies:

DoD/War Department: AI Integration Specialists for ‘GenAI.mil enterprise onboarding’, data scientists for ‘multi-model federation and agentic tool deployment’. Requirements include IL5-accredited environments, real-time data stream integration, and ‘daily battle rhythm’ AI workflows. Many require Palantir Foundry/AIP experience.

DOE National Labs: ‘Closed-loop AI experimentation engineers’ and ‘autonomous laboratory systems integrators’ at PNNL and LLNL. Job descriptions reference Genesis Mission priorities directly — building hypothesis-generation → robotic execution → model refinement pipelines.

Cross-Agency Data Platforms: ‘Enterprise data fusion specialists’ in civilian agencies (USCIS, IRS, HHS) focused on ‘cross-domain identity resolution’ and ‘executable workflow orchestration’ — the integration layer connecting perception to actuation.

Treasury: IT Specialist (AI) postings requiring candidates to ‘lead and coordinate cross-agency AI engineering projects’ and demonstrate ‘alignment of emerging technology adoption with Administration priorities’. Term-limited: 2 years, extendable to 4 years78.

Three role archetypes deserve specific attention:

The Preemption Litigator (DOJ AI Litigation Task Force): Not just constitutional lawyers — postings seek ‘Technical Subject Matter Experts’ with experience in AI model interpretability and algorithmic outputs. Their remit is to ‘verify’ whether state laws require AI models to ‘alter truthful outputs’ — framing model safety as a federal free speech violation. Operators tasked with dismantling state-level oversight using technical definitions of ‘truth’ as legal weapons.

The Fraud-as-a-Graph Scientist (USCIS VOWS Platform): Data scientist postings emphasising ‘graph theory’ and ‘automated records processing’. By treating immigration as a graph-matching problem, the system moves from ‘detecting fraud’ to preemptively denying clearance based on network anomalies — perception turned into an automated gatekeeper.

The Agentic Architect (HHS OneHHS): ‘AI Strategy Implementation Leads’ reporting to the Deputy Secretary’s AI Governance Board, building ‘Agentic AI capabilities’ to automate FDA pre-market reviews and post-market surveillance. The human handles exceptions; the agent handles the baseline.

The Identity Graph emerges not from a master database — which would trigger legislative alarm—but from administrative compatibility. When USCIS, IRS, and HHS use the same IL5-accredited fusion tools, identity resolution happens as a technical default. Coordination without a memo. The graph perceives behaviour (financial transactions, travel patterns, family formation), models risk (network anomalies, trust scores), and actuates access (clearance, benefits, e-wallet unlocks). By the time the legal fight over digital identity reaches the courts, the graph will already be the functional prerequisite for receiving a tax refund, crossing a border, or opening an e-wallet.

The ‘tour of duty’ framing is structurally significant. By limiting these roles to 1-2 year terms, the state:

Bypasses civil service bottlenecks: Rapidly infusing talent that hasn’t been institutionalised by existing bureaucracy

Creates a technical reserve: A fleet of technologists who will return to the private sector (Google, xAI, Palantir) having built the very government systems their companies now operate

Call it the revolving door API. These technologists aren’t permanent civil servants; they’re career-builders whose primary incentive is ensuring the systems they build are compatible with the tools they’ll use when they return to the private sector in 24 months. The state’s architecture gets rewired to think in vendor language — Palantir’s Ontology, Google’s Vertex AI, OpenAI’s function calls as the baseline for policy implementation. By the time they leave, the dependency is structural.

The pattern is transatlantic. UK government postings show the same migration: Ministry of Justice hiring a ‘Head of AI Governance’79 to build portfolio rules, risk registers, and lifecycle controls — governance as operating layer, not ethics document. UKHSA advertising for ‘Identity Automation Lead’ where ‘automation is the cornerstone’ of identity management — permissions becoming programmable through Graph API and DevOps pipelines. When governance work is redescribed as engineering work (pipelines, automation, APIs, lifecycle controls), that’s the migration: policy debates → implemented defaults.

The EU has named this directly: a European Parliament study on ‘algorithmic accountability’ warned that these systems risk ‘undermining meaningful scrutiny and accountability’80. The diagnosis is accurate. The framework arrives at legislative speed; the architecture deploys at procurement speed.

The surge timing — right after GenAI.mil/xAI integration and Genesis MOUs — isn’t coincidental. It’s the staffing push to lock in velocity through the transition window.

Watch the next 28 days through the January 20 inauguration anniversary window. The pattern suggests a ‘midnight procurement’ surge — a rush to sign MOUs and finalise accreditation (IL5/ATO) for systems that become harder to cancel once operational. Infrastructure that’s running is infrastructure that persists.

The endpoint isn’t universal lockstep, except during declared emergencies. Under normal conditions, it’s stratified clearance: some actors get frictionless throughput (fast lanes, fewer audits, broader permissions), everyone else gets governance by friction. But when an emergency is declared, the tiers collapse. And once tiering exists, ‘freedom’ becomes ‘you’re in the high-trust lane’, not ‘the rules changed’.

The question isn’t whether the system becomes tiered. It’s whether the top tier recognises tiering as governance, and under which conditions.

Infrastructure as fact. By the time a future administration or court ruling attempts to regulate these systems — 2028, 2030 — they will find the systems operationally inseparable from the state. You cannot unplug the model without crashing the benefits system, the border-vetting platform, the power grid, the settlement architecture. The next decade won’t be governed by laws debated in legislatures but by authorised software versions and API documentation. The window for contestation is now, while the stack is still being installed.

Spaceship Earth

There’s a frame that makes all of this legible.

If you believe the planet is a closed system with finite resources, accelerating crises, and populations that can’t be trusted to coordinate through deliberative politics — then automated anticipatory governance isn’t a bug. It’s the design specification.

The loop makes sense as planetary management:

Perception: Satellites, sensors, data streams — comprehensive awareness of resource flows, population movements, economic activity, climate systems, threat vectors

Prediction: Digital Twins and AI models generating predictive hypotheses about what happens next — before it happens

Actuation: Clearance systems that route flows toward desired outcomes — transactions that clear, shipments that arrive, people who can move, reconstruction funds that disburse

Feedback: Outcomes refine the models, tighten the parameters, improve the predictions

Genesis isn’t just ‘AI for science’. It’s the knowledge-generation layer — energy, materials, manufacturing, biology. The capacity to understand and manipulate physical systems at scale.

Gaza isn't just a settlement. It's a pilot program — population governance through certification, reconstruction through digital identity, sovereignty through audit. Ukraine's 28-point plan runs the same template: Peace Council, conditional security guarantees, externally-set parameters. Venezuela is watching the Caribbean interdictions and drawing conclusions.

GenAI.mil isn’t just enterprise software. It’s three million operators trained to treat AI as ‘teammate’: the human-machine interface normalised. Until the AI has been trained, and its human ‘teammates’ are no longer required.

The CBDC rails, the conditional clearance APIs, the sanctions enforcement, the immigration graph-matching — these are the actuators. The mechanisms that translate model outputs into material consequences.

The thesis isn’t ‘bad people are building a control system’.

The thesis is: if you accept the premise that Earth must be managed as a system, this is what management looks like. And the premise is increasingly ambient — in climate discourse, pandemic response, great-power competition, AI safety. The emergency framing that justifies speed over deliberation.

‘We don’t have time for politics’ is the ideology. The architecture is the implementation.

Accreditation is the primary tool of power. Not a bureaucratic checkbox but the apex of power: the power to state what should be done. ‘Inclusive capitalism’ will include only what holds the correct credentials. It’s a power of exclusion.

The pattern is the same at every scale: if your AI model isn’t IL5/FedRAMP certified, it doesn’t exist in the federal stack. If your company isn’t on the vendor list, you can’t bid. If your state law isn’t ‘consistent with federal policy’, you face preemption litigation. If your digital identity doesn’t clear, you don’t receive reconstruction funds. If your nation’s governance doesn’t meet certification conditions, you don’t get security guarantees.

Existence within the system is contingent on passing the accreditation check. Whoever sets the certification parameters sets the boundaries of operational reality.

The operating logic:

Identity + Accreditation creates the model — who you are, how far you can reach (general systems theory)

Data + Audit produces the clearing — what you did versus what you’re allowed, the balancing of ‘is’ versus ‘ought’ (using input-output analysis)

Finance + Allocation + Enforcement executes the settlement — resources flow or don’t, access granted or denied (cybernetics)

Finance is the actuator, soon to be fully cybernetic. Conditional APIs, programmable money, real-time clearing. The Meridian pattern: orchestration at the rail level, settlement in milliseconds.

Full automated Spaceship Earth, running on adaptive management.

The four-layer loop is adaptive management: the governance methodology that treats intervention as experiment, outcomes as data, and policy as parameters to be tuned. What ecology proposed for ecosystems, the architecture implements for populations.

But who sits in the control room81?

That’s where it gets uncomfortable. The essay documents infrastructure being built, parameters being set. But the question of democratic accountability is... not even rejected. Just seemingly architecturally irrelevant. The system doesn’t need your vote. It needs your data.

The people setting parameters now — the two-year technologists, the operator cells, the accreditation bodies, the vendor partnerships — aren’t elected. They’re not even visible. They’re ‘closing implementation gaps’.

The Spaceship Earth framing has a corollary:

If the planet is a spaceship, most people are passengers. Some people are crew. And someone is in the cockpit.

The architecture decides who’s who. And it decides it through clearance, not citizenship.

The passenger whose benefits don’t clear. The patient whose treatment isn’t reimbursed because the model scored it unnecessary. The homeowner whose power sheds so the data center keeps running. They won’t see a decision. They’ll see a routing error, a processing delay, additional verification required. Weather.

The loop closes in seconds. A satellite sees movement; the fusion engine resolves identity; the model evaluates against Board parameters; the financial rail actuates. Sixty seconds from perception to consequence.

The passenger sees a failed transaction — weather, a routing error in an increasingly complex world. The crew sees a successful API callback. The cockpit sees a cleared dashboard. Policy has been replaced by parameters.

And if you want to change the world in 2026, you don’t campaign for a vote; you bid for an ATO82.

Appendix: Anticipating Anticipatory Governance

Christmas Week Watchlist + Scorecard (Dec 23 – Jan 24)

The essays documented a pattern; this appendix operationalises it. What follows are testable predictions derived from the structural logic above. Check back January 24 to score.

Anchoring Deadlines

These aren’t predictions. They’re already scheduled.

Dec 29: Agency AI acquisition policy update deadline (OMB M-25-22, 270 days from Apr 3)

Jan 10: AI Litigation Task Force formation deadline (EO, 30 days from Dec 11)

Jan 14: Genesis Mission RFI #1 closes

Jan 23: Genesis Mission RFI #2 closes

~Mar 11: Commerce ‘onerous laws’ evaluation due (EO, 90 days)

~Mar 11: BEAD-related policy notice due (EO, 90 days)
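The anchoring dates are calendar arithmetic. The check below assumes each window counts calendar days from the date given in parentheses. The underlying orders may define the count differently, for example in business days or from publication.

```python
from datetime import date, timedelta


def deadline(start: date, days: int) -> date:
    """Add a window of calendar days to an issue or signing date (assumption: calendar days)."""
    return start + timedelta(days=days)


m_25_22 = deadline(date(2025, 4, 3), 270)         # OMB M-25-22 agency policy update
task_force = deadline(date(2025, 12, 11), 30)     # AI Litigation Task Force formation
commerce_eval = deadline(date(2025, 12, 11), 90)  # Commerce 'onerous laws' evaluation
print(m_25_22, task_force, commerce_eval)         # 2025-12-29 2026-01-10 2026-03-11
```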

Predictions by Domain

Platform Layer (GenAI.mil + Genesis)

GenAI.mil publishes ‘authorised tools/applications’ list: 70%

Signal: PDF/bulletin naming approved apps or onboarding rules

GenAI.mil training mandate announced (‘AI champions’, quotas): 80%

Signal: Directive requiring unit-level AI training or designated personnel

Model federation signaling (Claude/ChatGPT): 60%

Signal: ‘Integration underway’ / ‘pilot cohorts’ / ‘pending IL5’ language

Genesis ‘national challenge’ domain named: 50%

Signal: DOE names grid/minerals/semiconductors/bio as first challenge

Genesis partner count increases (24 → 30+): 70%

Signal: New MOUs announced before RFI deadlines close

Integration Layer (Palantir + Agency AI)

Contract modifications on SAM.gov (AI/data): 80%

Signal: Expansions, OTAs, sole-source filings for AI vendors

Palantir Phase 1 contract (USCIS or other civil agency): 50%

Signal: Follow-on to ‘VOWS’ Phase 0 or similar civil agency award

‘Single surface’ signal (Genesis ↔ GenAI.mil): 30%

Signal: Any language on DOE-DOD AI interoperability or shared accreditation

Operator Cell (Ukraine + Gaza)

Gaza Board of Peace staffing announced: 50%

Signal: Liaison names, working group formation, ‘operationalization’ language

Ukraine ‘framework’ language surfaces: 40%

Signal: Phased conditions, security guarantees scaffold (not final terms)

Gaza gas monetization confirmed: 30%

Signal: Reuters/AP/WSJ confirms ADNOC stake or gas-to-reconstruction

Preemption Layer

AI Litigation Task Force roster published: 80%

Signal: Named staff, office placement, remit framing

Commerce ‘onerous laws’ groundwork visible: 50%

Signal: Guidance document, preliminary list, or NTIA statements

International

UK-US AI coordination announcement: 40%

Signal: Joint statement on research coordination or ‘allied AI’

Where to Verify

SAM.gov: contract awards, modifications, sole-source justifications

Defense.gov (defense.gov/News/Contracts): daily DOD contract announcements

Federal Register (federalregister.gov): regulatory guidance, interim rules, policy memoranda

War.gov: GenAI.mil announcements, DOD AI policy

Energy.gov: Genesis Mission updates, vendor partnerships

DOJ (justice.gov): Task Force announcements, litigation signals

Gov.uk: UK parallel deployments, coordination announcements

Phrase Watchlist

Linguistic markers that signal movement:

Platform: ‘IL5 authority’, ‘ATO’, ‘authorized models’, ‘initial operating capability’, ‘enterprise onboarding’, ‘AI champions’

Integration: ‘enterprise agreement’, ‘data platform’, ‘fusion center’, ‘cross-domain’

Operator Cell: ‘working group’, ‘implementation steps’, ‘operationalization’, ‘stabilisation force’, ‘anchor funding’

Preemption: ‘inconsistent with national AI policy’, ‘eligibility conditions’, ‘fragmenting innovation’

Confidence Key

0.8+ = Near-certain (hard deadline or explicit pipeline)

0.6-0.7 = High likelihood (pattern-consistent, low-attention window optimal)

0.4-0.5 = Medium likelihood (logical but speculative)

0.3 = Wildcard (significant if confirmed)

The Meta-Prediction

Over the next 7-14 days: quiet platform expansion (training mandates, certification artifacts, onboarding documentation), procedural consolidation (implementation groups, staffing, liaison roles), and symbolic preemption skirmishes — while the real work continues as procurement and rollout.

The attention deficit is the feature.

January 24 scorecard: If ≥9 of 14 predictions verify, the structural thesis holds. If ≤3 verify, we’re pattern-matching noise.
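The threshold can be read against the stated probabilities. This sketch makes two assumptions: the percentages are calibrated, and the 14 outcomes are independent. The second assumption is a simplification, since items such as the two Genesis predictions are plainly correlated. Under those assumptions, the expected number of verified predictions is the sum of the probabilities, and the full distribution follows from a Poisson-binomial recurrence. The labels are shorthand for the predictions listed above.

```python
# Stated probabilities for the 14 predictions, in the order listed above.
PREDICTIONS = {
    "GenAI.mil authorised tools list": 0.70,
    "GenAI.mil training mandate": 0.80,
    "Model federation signaling": 0.60,
    "Genesis national challenge named": 0.50,
    "Genesis partners 24 -> 30+": 0.70,
    "SAM.gov AI/data modifications": 0.80,
    "Palantir Phase 1 civil award": 0.50,
    "Single-surface signal": 0.30,
    "Gaza Board of Peace staffing": 0.50,
    "Ukraine framework language": 0.40,
    "Gaza gas monetization confirmed": 0.30,
    "AI Litigation Task Force roster": 0.80,
    "Commerce onerous-laws groundwork": 0.50,
    "UK-US AI coordination": 0.40,
}


def hit_distribution(probs: list[float]) -> list[float]:
    """Poisson-binomial PMF: dist[k] = P(exactly k predictions verify), assuming independence."""
    dist = [1.0]
    for p in probs:
        nxt = [0.0] * (len(dist) + 1)
        for k, mass in enumerate(dist):
            nxt[k] += mass * (1 - p)   # this prediction misses
            nxt[k + 1] += mass * p     # this prediction verifies
        dist = nxt
    return dist


probs = list(PREDICTIONS.values())
dist = hit_distribution(probs)
expected = sum(probs)
p_thesis_holds = sum(dist[9:])   # P(at least 9 verify)
p_noise = sum(dist[:4])          # P(at most 3 verify)
print(f"expected hits: {expected:.1f}")  # expected hits: 7.8
print(f"P(>=9): {p_thesis_holds:.2f}, P(<=3): {p_noise:.3f}")
```

Outcomes of 4 to 8 verified predictions fall between the two stated thresholds.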

A Digital World Order

We have moved from planning to implementation, and the six rails are being fused with increasingly capable computational (artificial) intelligence. This fusion aims to create a world where governance is no longer reactive but predictive, and human behaviour is pre-emptively shaped by algorithmic decree.